One feature you will not find in most Platform as a Service solutions is the ability to write to the file system. This matters whenever you want to store user-uploaded content, write a Lucene index, or read a configuration file from a directory. OpenShift Express has supported writing to the file system from the start.

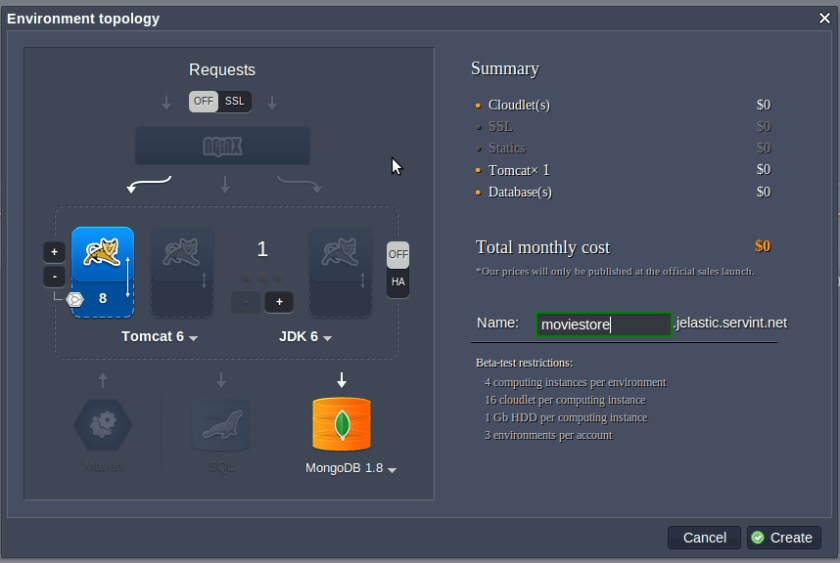

In this blog I will create a simple Spring MVC MongoDB application which stores movie documents. I will use Spring Roo to quickly scaffold the application. Spring Roo does not provide file upload functionality, so I will modify the default application to add that support. Then I will deploy the application to OpenShift Express.

Creating an OpenShift Express JBoss AS7 application

The first step is to create the JBoss AS7 application in OpenShift Express. To do that, type the command shown below. I am assuming you have the OpenShift Express Ruby gem installed on your machine.

rhc-create-app -l <rhlogin email> -a movieshop -t jbossas-7.0 -d

This will create a sample Java web application which you can view at http://movieshop-<namespace>.rhcloud.com.

Adding support for MongoDB Cartridge

As we are creating a Spring MongoDB application, we should add the MongoDB cartridge by executing the command shown below.

rhc-ctl-app -l <rhlogin email> -a movieshop -e add-mongodb-2.0 -d

Removing default generated files from git

We don't need the default generated files, so remove them by executing the following commands.

git rm -rf src pom.xml

git commit -a -m "removed default generated files"

Creating Spring MVC MongoDB MovieShop Application

Fire up the Roo shell and execute the following commands to create the application.

project --topLevelPackage com.xebia.movieshop --projectName movieshop --java 6

mongo setup

entity mongo --class ~.domain.Movie

repository mongo --interface ~.repository.MovieRepository

service --interface ~.service.MovieService

field string --fieldName title --notNull

field string --fieldName description --notNull --sizeMax 4000

field string --fieldName stars --notNull

field string --fieldName director --notNull

web mvc setup

web mvc all --package ~.web

Adding file upload support

Add two fields, along with their getters and setters, to the Movie entity as shown below.

@Transient

private CommonsMultipartFile file;

private String fileName;

public CommonsMultipartFile getFile() {

return this.file;

}

public void setFile(CommonsMultipartFile file) {

this.file = file;

}

public String getFileName() {

return fileName;

}

public void setFileName(String fileName) {

this.fileName = fileName;

}

Edit the create.jspx file as shown below to add the file upload field.

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<div xmlns:c="http://java.sun.com/jsp/jstl/core" xmlns:field="urn:jsptagdir:/WEB-INF/tags/form/fields" xmlns:form="urn:jsptagdir:/WEB-INF/tags/form" xmlns:jsp="http://java.sun.com/JSP/Page" xmlns:spring="http://www.springframework.org/tags" version="2.0">

<jsp:directive.page contentType="text/html;charset=UTF-8"/>

<jsp:output omit-xml-declaration="yes"/>

<form:create id="fc_com_xebia_movieshop_domain_Movie" modelAttribute="movie" path="/movies" render="${empty dependencies}" z="wysyQcUIaJOAUzNYNVt5nMEdvHk=" multipart="true">

<field:input field="title" id="c_com_xebia_movieshop_domain_Movie_title" required="true" z="SpYrTojoyx2F7X5CjEfFQ6CBdA4="/>

<field:textarea field="description" id="c_com_xebia_movieshop_domain_Movie_description" required="true" z="vxiB62k7E7FzhnVz1kU7CCIYEkw="/>

<field:input field="stars" id="c_com_xebia_movieshop_domain_Movie_stars" required="true" z="XdvY0mpBitMGzrARD3TmTxxXZHg="/>

<field:input field="director" id="c_com_xebia_movieshop_domain_Movie_director" required="true" z="6L8yvzx1cZgTq0QKP1dHbGHbQxI="/>

<field:input field="file" id="c_com_xebia_movieshop_domain_Movie_file" label="Upload image" type="file" z="user-managed"/>

<field:input field="fileName" id="c_com_xebia_movieshop_domain_Movie_fileName" z="user-managed" render="false"/>

</form:create>

<form:dependency dependencies="${dependencies}" id="d_com_xebia_movieshop_domain_Movie" render="${not empty dependencies}" z="nyAj+bBGTpzOr2SwafD6lx7vi30="/>

</div>

Also change the input.tagx file to support the file input type by adding the following lines.

<c:when test="${type eq 'file'}">

<form:input id="_${sec_field}_id" path="${sec_field}" disabled="${disabled}" type="file"/>

</c:when>

Modify the MovieController to write the file either to OPENSHIFT_DATA_DIR or to a directory on my local machine in case System.getenv("OPENSHIFT_DATA_DIR") is null. The code is shown below.

import java.io.File;

import java.io.FileInputStream;

import java.io.UnsupportedEncodingException;

import java.math.BigInteger;

import javax.servlet.ServletOutputStream;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletResponse;

import javax.validation.Valid;

import org.apache.commons.io.IOUtils;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.roo.addon.web.mvc.controller.scaffold.RooWebScaffold;

import org.springframework.stereotype.Controller;

import org.springframework.ui.Model;

import org.springframework.validation.BindingResult;

import org.springframework.web.bind.annotation.PathVariable;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RequestMethod;

import org.springframework.web.bind.annotation.RequestParam;

import org.springframework.web.multipart.commons.CommonsMultipartFile;

import org.springframework.web.util.UriUtils;

import org.springframework.web.util.WebUtils;

import com.xebia.movieshop.domain.Movie;

import com.xebia.movieshop.service.MovieService;

@RequestMapping("/movies")

@Controller

@RooWebScaffold(path = "movies", formBackingObject = Movie.class)

public class MovieController {

private static final String STORAGE_PATH = System.getenv("OPENSHIFT_DATA_DIR") == null ? "/home/shekhar/tmp/" : System.getenv("OPENSHIFT_DATA_DIR");

@Autowired

MovieService movieService;

@RequestMapping(method = RequestMethod.POST, produces = "text/html")

public String create(@Valid Movie movie, BindingResult bindingResult,

Model uiModel, HttpServletRequest httpServletRequest) {

if (bindingResult.hasErrors()) {

populateEditForm(uiModel, movie);

return "movies/create";

}

CommonsMultipartFile multipartFile = movie.getFile();

String orgName = multipartFile.getOriginalFilename();

uiModel.asMap().clear();

System.out.println(orgName);

String[] split = orgName.split("\\.");

movie.setFileName(split[0]);

movie.setFile(null);

movieService.saveMovie(movie);

String filePath = STORAGE_PATH + orgName;

File dest = new File(filePath);

try {

multipartFile.transferTo(dest);

} catch (Exception e) {

throw new RuntimeException(e);

}

return "redirect:/movies/"

+ encodeUrlPathSegment(movie.getId().toString(),

httpServletRequest);

}

@RequestMapping(value = "/image/{fileName}", method = RequestMethod.GET)

public void getImage(@PathVariable String fileName, HttpServletRequest req, HttpServletResponse res) throws Exception{

File file = new File(STORAGE_PATH+fileName+".jpg");

res.setHeader("Cache-Control", "no-store");

res.setHeader("Pragma", "no-cache");

res.setDateHeader("Expires", 0);

res.setContentType("image/jpeg");

ServletOutputStream ostream = res.getOutputStream();

IOUtils.copy(new FileInputStream(file), ostream);

ostream.flush();

ostream.close();

}

}
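The controller above builds the destination by concatenating STORAGE_PATH with the name sent by the browser. As a minimal sketch of a slightly more defensive variant, the helper below picks the base directory from an environment map and keeps only the last path segment of the uploaded name. The class UploadStorage and its method names are hypothetical and are not generated by Roo; the local fallback directory is whatever you pass in.

```java
import java.io.File;
import java.util.Map;

/** Hypothetical helper for illustration; not part of the Roo-generated application. */
final class UploadStorage {

    private UploadStorage() {
    }

    /**
     * Picks the upload directory: OPENSHIFT_DATA_DIR when it is set,
     * otherwise the given local fallback directory.
     */
    static File resolveBaseDir(Map<String, String> env, String localFallback) {
        String dataDir = env.get("OPENSHIFT_DATA_DIR");
        if (dataDir == null || dataDir.trim().length() == 0) {
            return new File(localFallback);
        }
        return new File(dataDir);
    }

    /**
     * Builds the destination file inside baseDir. Only the last path segment
     * of the client-supplied name is kept, so names such as "../../etc/passwd"
     * cannot point outside the upload directory.
     */
    static File resolveDestination(File baseDir, String originalFilename) {
        String name = originalFilename.replace('\\', '/');
        name = name.substring(name.lastIndexOf('/') + 1);
        if (name.length() == 0 || name.equals(".") || name.equals("..")) {
            throw new IllegalArgumentException("Unusable file name: " + originalFilename);
        }
        // new File(parent, child) inserts the separator itself, so it works
        // whether or not the environment variable ends with a slash.
        return new File(baseDir, name);
    }
}
```

In the controller this could be used as resolveDestination(resolveBaseDir(System.getenv(), "/home/shekhar/tmp/"), orgName) before calling transferTo.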

Also change the show.jspx file to display the image.

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<div xmlns:field="urn:jsptagdir:/WEB-INF/tags/form/fields" xmlns:jsp="http://java.sun.com/JSP/Page" xmlns:page="urn:jsptagdir:/WEB-INF/tags/form" version="2.0">

<jsp:directive.page contentType="text/html;charset=UTF-8"/>

<jsp:output omit-xml-declaration="yes"/>

<page:show id="ps_com_xebia_movieshop_domain_Movie" object="${movie}" path="/movies" z="2GhOPmD72lRGGsTvy9DYx8/b/b4=">

<field:display field="title" id="s_com_xebia_movieshop_domain_Movie_title" object="${movie}" z="L3rzNq9mt4vOBL/2S9L5XTn4pGA="/>

<field:display field="description" id="s_com_xebia_movieshop_domain_Movie_description" object="${movie}" z="rctpFQukL584DSNTEhcZ/zqm19U="/>

<field:display field="stars" id="s_com_xebia_movieshop_domain_Movie_stars" object="${movie}" z="Mi3QNsQkI5hqOVW44XwXAGF2zKE="/>

<field:display field="director" id="s_com_xebia_movieshop_domain_Movie_director" object="${movie}" z="rhXx3l+3zMxx0O0ht2Td3Icx1ZE="/>

<field:display field="fileName" id="s_com_xebia_movieshop_domain_Movie_fileName" object="${movie}" z="7XTMedYLsWVvZkq2fKT0EZpZaPE="/>

<IMG alt="${movie.fileName}" src="/movieshop/movies/image/${movie.fileName}" />

</page:show>

</div>
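The image URL in show.jspx is built from movie.fileName, which the controller derives with split("\\.")[0] and later completes with a fixed ".jpg". That works for a name like poster.jpg, but a name such as the.matrix.jpg would be stored under its full name and looked up as the.jpg, and a PNG upload would be looked up with the wrong extension. A minimal sketch of splitting on the last dot instead is shown below. The FileNames class and the small extension-to-type mapping are my own assumptions for illustration, not part of the application above.

```java
import java.util.Locale;

/** Hypothetical helper for illustration; the names are not from the original application. */
final class FileNames {

    private FileNames() {
    }

    /** Returns the name without its last extension, e.g. "the.matrix.jpg" gives "the.matrix". */
    static String baseName(String fileName) {
        int dot = fileName.lastIndexOf('.');
        // dot <= 0 covers both "no dot at all" and names like ".hidden".
        return dot <= 0 ? fileName : fileName.substring(0, dot);
    }

    /** Returns the lower-cased extension without the dot, or "" when there is none. */
    static String extension(String fileName) {
        int dot = fileName.lastIndexOf('.');
        return dot <= 0 ? "" : fileName.substring(dot + 1).toLowerCase(Locale.ENGLISH);
    }

    /** Maps a few common image extensions to MIME types; anything else is treated as binary. */
    static String contentTypeFor(String fileName) {
        String ext = extension(fileName);
        if (ext.equals("jpg") || ext.equals("jpeg")) {
            return "image/jpeg";
        }
        if (ext.equals("png")) {
            return "image/png";
        }
        if (ext.equals("gif")) {
            return "image/gif";
        }
        return "application/octet-stream";
    }
}
```

With helpers like these, one option is to store the full original name on the Movie document and let getImage pick the content type from it, rather than assuming every upload is a JPEG.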

Finally, add a CommonsMultipartResolver bean to webmvc-config.xml as shown below. The maxUploadSize value is in bytes, so 100000 limits uploads to roughly 100 KB.

<bean

class="org.springframework.web.multipart.commons.CommonsMultipartResolver"

id="multipartResolver" >

<property name="maxUploadSize" value="100000"></property>

</bean>

Pointing to OpenShift MongoDB datastore

Change applicationContext-mongo.xml to point to the OpenShift MongoDB instance as shown below.

<mongo:db-factory dbname="${mongo.name}" host="${OPENSHIFT_NOSQL_DB_HOST}"

port="${OPENSHIFT_NOSQL_DB_PORT}" username="${OPENSHIFT_NOSQL_DB_USERNAME}"

password="${OPENSHIFT_NOSQL_DB_PASSWORD}" />
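The placeholders above refer to the environment variables of the MongoDB cartridge. To make it clearer what values end up in the connection, here is a rough sketch in plain Java that reads the same four variables from an environment map. The MongoSettings class is hypothetical, and the local defaults (127.0.0.1, MongoDB's default port 27017, empty credentials) are my assumptions for running outside OpenShift.

```java
import java.util.Map;

/** Hypothetical value object for illustration; not part of the Roo-generated application. */
final class MongoSettings {

    final String host;
    final int port;
    final String username;
    final String password;

    private MongoSettings(String host, int port, String username, String password) {
        this.host = host;
        this.port = port;
        this.username = username;
        this.password = password;
    }

    /** Reads the OPENSHIFT_NOSQL_DB_* variables, falling back to assumed local defaults. */
    static MongoSettings fromEnvironment(Map<String, String> env) {
        String host = valueOrDefault(env, "OPENSHIFT_NOSQL_DB_HOST", "127.0.0.1");
        // 27017 is the port a default MongoDB installation listens on.
        int port = Integer.parseInt(valueOrDefault(env, "OPENSHIFT_NOSQL_DB_PORT", "27017"));
        String username = valueOrDefault(env, "OPENSHIFT_NOSQL_DB_USERNAME", "");
        String password = valueOrDefault(env, "OPENSHIFT_NOSQL_DB_PASSWORD", "");
        return new MongoSettings(host, port, username, password);
    }

    private static String valueOrDefault(Map<String, String> env, String key, String fallback) {
        String value = env.get(key);
        return (value == null || value.length() == 0) ? fallback : value;
    }
}
```

Calling MongoSettings.fromEnvironment(System.getenv()) on a local machine without these variables would yield the local defaults, which is a quick way to check which values the application will see in each environment.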

Add OpenShift Maven Profile

OpenShift applications require a Maven profile called openshift, which is used for the build that runs when you do a git push. Add it to pom.xml as shown below.

<profiles>

<profile>

<!-- When built in OpenShift the 'openshift' profile will be used when

invoking mvn. -->

<!-- Use this profile for any OpenShift specific customization your app

will need. -->

<!-- By default that is to put the resulting archive into the 'deployments'

folder. -->

<!-- http://maven.apache.org/guides/mini/guide-building-for-different-environments.html -->

<id>openshift</id>

<build>

<finalName>movieshop</finalName>

<plugins>

<plugin>

<artifactId>maven-war-plugin</artifactId>

<version>2.1.1</version>

<configuration>

<outputDirectory>deployments</outputDirectory>

<warName>ROOT</warName>

</configuration>

</plugin>

</plugins>

</build>

</profile>

</profiles>

Deploying Application to OpenShift

Finally, do a git push to deploy the application to OpenShift. You can then view the application running at http://movieshop-random.rhcloud.com/