This is the third post in my series on MongoDB. It looks at how the working set affects MongoDB performance. The idea for this experiment came to me after I read a very good blog post by Colin Howe on the MongoDB working set. The tests in this post are along similar lines to the ones Colin Howe describes, but they are performed with Java and MongoDB version 2.0.1. If you have worked with MongoDB or read about it, you might have heard the term working set. The working set is the amount of data (including indexes) that is in use by your application. If this data fits in RAM, application performance will be great; otherwise it degrades drastically, because when the data can't fit in RAM MongoDB has to hit the disk. I recommend reading the blog post by Adrian Hills on the importance of the working set. To help you understand the working set better, I am citing the example from Adrian's blog:

Suppose you have 1 year’s worth of data. For simplicity, each month relates to 1GB of data giving 12GB in total, and to cover each month’s worth of data you have 1GB worth of indexes again totalling 12GB for the year.

If you are always accessing the last 12 month’s worth of data, then your working set is: 12GB (data) + 12GB (indexes) = 24GB.

However, if you actually only access the last 3 month’s worth of data, then your working set is: 3GB (data) + 3GB (indexes) = 6GB.

From the example above, if you only access the last 3 months of data and your machine has more than 6GB of RAM, your application will perform great; otherwise it will be slow. The important thing to know about the working set is that MongoDB uses an LRU strategy to decide which documents are in RAM, and you can't tell MongoDB to keep a particular document or collection in RAM. Now that you know what the working set is and how important it is, let's start the experiment.
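To make the LRU idea concrete, here is a minimal toy simulation. It is only a sketch: the `LruCache` class, the `hitRate` method, and the cache sizes are made up for illustration, and it does not model how MongoDB or the operating system actually page data. It counts how many uniformly random reads are served from a fixed-size LRU cache when the set of keys being read fits in the cache and when it does not.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Random;

class LruWorkingSetSketch {

    /** A tiny LRU cache: a LinkedHashMap in access order that evicts the eldest entry when full. */
    static final class LruCache extends LinkedHashMap<Integer, Boolean> {
        private static final long serialVersionUID = 1L;
        private final int capacity;

        LruCache(int capacity) {
            super(16, 0.75f, true); // true = access order, least recently used entry first
            this.capacity = capacity;
        }

        @Override
        protected boolean removeEldestEntry(Map.Entry<Integer, Boolean> eldest) {
            return size() > capacity;
        }
    }

    /** Fraction of uniformly random reads over workingSetSize keys that are served from the cache. */
    static double hitRate(int cacheCapacity, int workingSetSize, int reads, long seed) {
        LruCache cache = new LruCache(cacheCapacity);
        Random random = new Random(seed);
        int hits = 0;
        for (int i = 0; i < reads; i++) {
            Integer key = random.nextInt(workingSetSize);
            if (cache.get(key) != null) {
                hits++; // the key was already cached (stands in for "in RAM")
            } else {
                cache.put(key, Boolean.TRUE); // stands in for a disk read; may evict the LRU entry
            }
        }
        return (double) hits / reads;
    }

    public static void main(String[] args) {
        // Hypothetical sizes: the cache holds 1000 entries in both cases.
        System.out.printf("working set of 500 keys:   hit rate %.3f%n", hitRate(1000, 500, 100000, 42L));
        System.out.printf("working set of 10000 keys: hit rate %.3f%n", hitRate(1000, 10000, 100000, 42L));
    }
}
```

When the keys fit in the cache, only the first read of each key misses. When they do not, most reads miss, which is the toy equivalent of going to disk.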

Setup

Dell Vostro running Ubuntu 11.04 with 4 GB RAM and a 300 GB hard disk.

Java 6.

MongoDB 2.0.1.

Spring MongoDB 1.0.0.M5, which internally uses MongoDB Java driver version 2.6.5.

Document

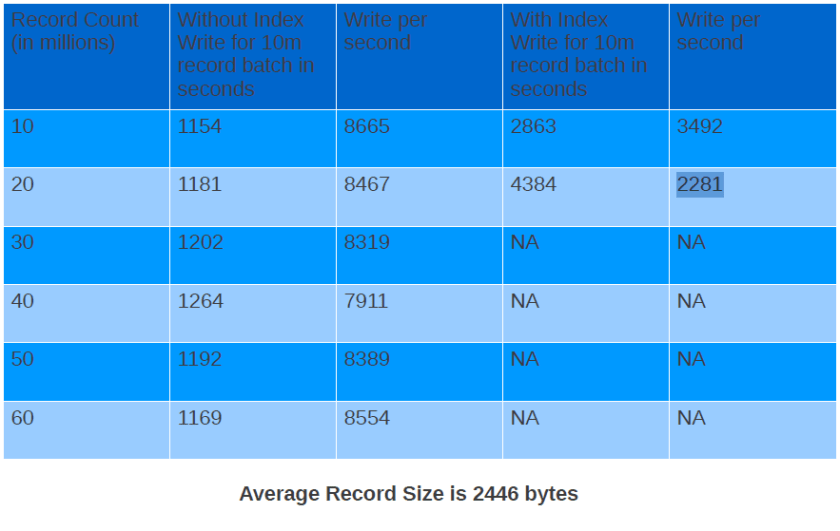

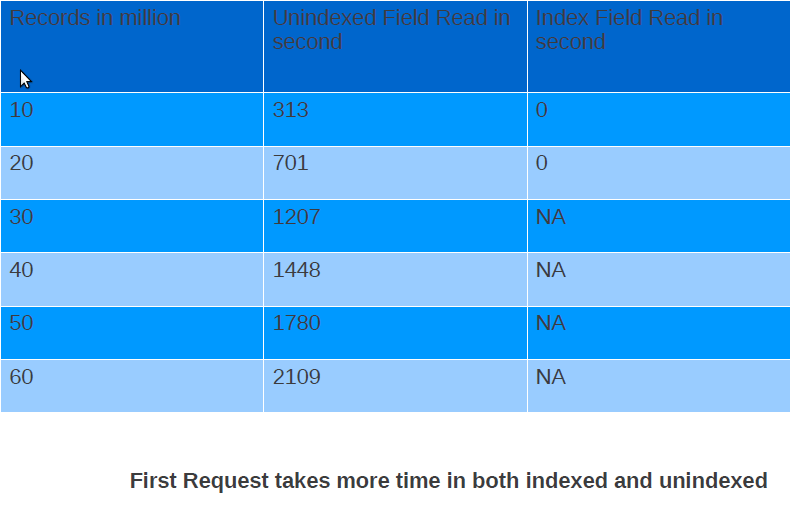

The documents I am storing in MongoDB look like the one shown below. The average document size is 2400 bytes. Please note that the _id field also has an index. The index that I will be creating will be on the index field.

    {
        "_id" : ObjectId("4ed89c140cf2e821d503a523"),
        "name" : "Shekhar Gulati",
        "someId1" : NumberLong(1000006),
        "str1" : "U",
        "date1" : ISODate("1997-04-10T18:30:00Z"),
        "index" : 1,
        "bio" : "I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a
        Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java
        Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I
        am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java
        Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I
        am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java
        Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I
        am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java
        Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I
        am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java
        Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I
        am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java
        Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I
        am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java
        Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I
        am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java
        Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I
        am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java Developer. I am a Java
        Developer. I am a Java Developer. "
    }

Test Case

The test case runs 6 times, with 10k, 100k, 1 million, 2 million, 3 million, and 10 million records. The document used is shown under the Document heading and is the same as the one used in the first post, with one extra field, index (a simple int field that auto-increments by one). Before the records are inserted, an index is created on the index field; the records are then inserted in batches of 100. Finally, 30000 queries are performed on a selected part of the collection. The selection is 1%, 10%, or 100% of the collection; 99% of the queries pick a random key inside the selection and the remaining 1% pick a random key from the whole collection. The queries are performed 3 times on each selection to give MongoDB a chance to put the selected dataset in RAM. The JUnit test case is shown below.

    @Test
    public void workingSetTests() throws Exception {
        benchmark(10000);
        cleanMongoDB();
        benchmark(100000);
        cleanMongoDB();
        benchmark(1000000);
        cleanMongoDB();
        benchmark(2000000);
        cleanMongoDB();
        benchmark(3000000);
        cleanMongoDB();
        benchmark(10000000);
        cleanMongoDB();
    }

    private void benchmark(int totalNumberOfKeys) throws Exception {
        // Create the index on the "index" field before any documents are inserted.
        IndexDefinition indexDefinition = new Index("index", Order.ASCENDING)
                .named("index_1");
        mongoTemplate.ensureIndex(indexDefinition, User.class);

        int batchSize = 100;
        int i = 0;
        long startTime = System.currentTimeMillis();
        LineIterator iterator = FileUtils.lineIterator(new File(FILE_NAME));
        try {
            while (i < totalNumberOfKeys && iterator.hasNext()) {
                List<User> users = new ArrayList<User>();
                // Fill one batch, stopping early if the input file runs out of lines.
                for (int j = 0; j < batchSize && iterator.hasNext(); j++) {
                    String line = iterator.nextLine();
                    User user = convertLineToObject(line);
                    user.setIndex(i);
                    users.add(user);
                    i += 1;
                }
                mongoTemplate.insert(users, User.class);
            }
        } finally {
            iterator.close(); // release the underlying file handle
        }
        long endTime = System.currentTimeMillis();
        logger.info(String.format("%d documents inserted took %d milliseconds", totalNumberOfKeys, (endTime - startTime)));

        // Run each focus level three times so the selected data has a chance to be loaded into RAM.
        performQueries(totalNumberOfKeys, 1);
        performQueries(totalNumberOfKeys, 1);
        performQueries(totalNumberOfKeys, 1);
        performQueries(totalNumberOfKeys, 10);
        performQueries(totalNumberOfKeys, 10);
        performQueries(totalNumberOfKeys, 10);
        performQueries(totalNumberOfKeys, 100);
        performQueries(totalNumberOfKeys, 100);
        performQueries(totalNumberOfKeys, 100);

        // Log the collection statistics, including the storage size in megabytes.
        String collectionName = mongoTemplate.getCollectionName(User.class);
        CommandResult stats = mongoTemplate.getCollection(collectionName).getStats();
        logger.info("Stats : " + stats);
        double size = stats.getDouble("storageSize");
        logger.info(String.format("Storage Size : %.2f M", size / (1024 * 1024)));
    }

    private void performQueries(int totalNumberOfKeys, int focus) {
        int gets = 30000;
        // One Random instance is enough; there is no need to create a new one per query.
        Random random = new Random();
        long startTime = System.currentTimeMillis();
        for (int index = 0; index < gets; index++) {
            // 99 out of 100 queries target the bottom focus% of the keys.
            boolean focussedGet = random.nextInt(100) != 0;
            int key = 0;
            if (focussedGet) {
                key = random.nextInt((totalNumberOfKeys * focus) / 100);
            } else {
                // The remaining queries pick a key from the whole collection.
                key = random.nextInt(totalNumberOfKeys);
            }
            mongoTemplate.findOne(Query.query(Criteria.where("index").is(key)),
                    User.class);
        }
        long endTime = System.currentTimeMillis();
        logger.info(String.format("%d gets (focussed on bottom %d%%) took %d milliseconds", gets, focus, (endTime - startTime)));
    }
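To see what the focus parameter does to the key distribution, the key selection can be pulled out of the benchmark and run on its own. This is a sketch, not part of the original test: the `pickKey` and `fractionInFocus` names are made up for illustration, a seeded `Random` is used so runs are repeatable, and the bound is computed with `long` arithmetic (unlike the benchmark) as a guard against `int` overflow on larger collections.

```java
import java.util.Random;

class FocusedKeySketch {

    /** Picks a key the way the benchmark does: 99% from the bottom focus%, 1% from anywhere. */
    static int pickKey(Random random, int totalNumberOfKeys, int focus) {
        boolean focussedGet = random.nextInt(100) != 0;
        if (focussedGet) {
            // long arithmetic avoids int overflow in totalNumberOfKeys * focus
            int bound = (int) (((long) totalNumberOfKeys * focus) / 100);
            return random.nextInt(bound);
        }
        return random.nextInt(totalNumberOfKeys);
    }

    /** Fraction of sampled keys that land inside the focused range. */
    static double fractionInFocus(int totalNumberOfKeys, int focus, int samples, long seed) {
        Random random = new Random(seed);
        long limit = ((long) totalNumberOfKeys * focus) / 100;
        int inside = 0;
        for (int i = 0; i < samples; i++) {
            if (pickKey(random, totalNumberOfKeys, focus) < limit) {
                inside++;
            }
        }
        return (double) inside / samples;
    }

    public static void main(String[] args) {
        for (int focus : new int[] {1, 10, 100}) {
            System.out.printf("focus %3d%%: %.4f of keys fall in the focused range%n",
                    focus, fractionInFocus(10000000, focus, 100000, 7L));
        }
    }
}
```

With a focus of 1, roughly 99% of the queries touch only 1% of the documents, so that small slice is the effective working set for the run.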

Results

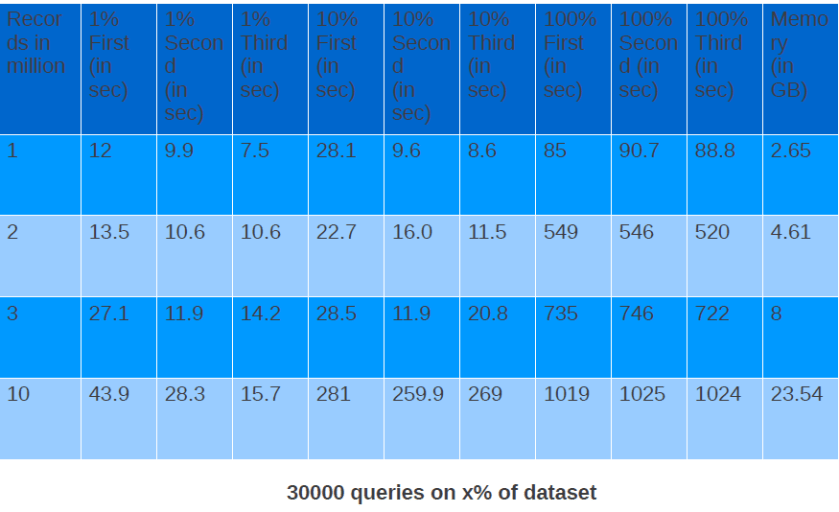

In the table above, the number of records is in millions and the time to do 30k queries is in seconds.

One thing this data clearly shows is that if your working set fits in RAM, performance remains almost the same regardless of the total number of documents in MongoDB. This can easily be seen by comparing the runs on the 1% dataset across all dataset sizes. The times for 30k queries on 1% of the dataset with 3 million records and with 10 million records are very close.
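A rough back-of-the-envelope estimate helps explain why the 1% runs behave alike. The sketch below assumes only the 2400-byte average document size and the 4 GB of RAM from the setup. It ignores index size, padding, and storage overhead, and not all of the 4 GB is available to MongoDB, so the numbers are indicative only. The class and method names are made up for illustration.

```java
class WorkingSetEstimateSketch {

    static final long AVG_DOCUMENT_BYTES = 2400L; // average document size stated in this post
    static final double RAM_MIB = 4096.0;         // 4 GB of RAM on the test machine

    /** Approximate size in MiB of the focused slice of a collection (documents only). */
    static double estimateMiB(long totalDocuments, int focusPercent) {
        long documents = totalDocuments * focusPercent / 100;
        return documents * AVG_DOCUMENT_BYTES / (1024.0 * 1024.0);
    }

    public static void main(String[] args) {
        long[] totals = {3000000L, 10000000L};
        int[] focuses = {1, 10, 100};
        for (long total : totals) {
            for (int focus : focuses) {
                double mib = estimateMiB(total, focus);
                System.out.printf("%d documents, %d%% focus: about %.0f MiB (%s 4 GB RAM)%n",
                        total, focus, mib, mib < RAM_MIB ? "below" : "above");
            }
        }
    }
}
```

Under these assumptions, 1% of either the 3 million or the 10 million record collection is far smaller than RAM, while the full 10 million record collection is several times larger than it.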