I was reading the paper “Concise Thoughts: Impact of Output Length on LLM Reasoning and Cost” today and thought of applying it to a problem I solved a couple of months back. The paper introduces the Constrained Chain of Thought (CCoT) prompting technique as an optimization over Chain of Thought (CoT) prompting.

Chain of Thought prompting is a technique that encourages LLMs to generate responses by breaking down complex problems into smaller, sequential steps. This technique enhances reasoning and improves the model’s ability to arrive at accurate conclusions by explicitly outlining the thought process involved in solving a problem or answering a question.

With the Zero-shot CoT technique, adding the line “Let’s think a bit step by step” to the prompt encourages the model to generate its reasoning first and then come up with an answer. In Zero-shot CCoT, the prompt also limits the number of words, for example “Let’s think a bit step by step and limit to N words.” Here, N can be any number that suits your problem.
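To make the difference between the three prompt styles concrete, here is a minimal sketch of a prompt builder. The function name `build_prompt`, the `technique` labels, and the way the suffix is appended on a new line are my own assumptions for illustration; only the suffix wording comes from the text above.

```python
from typing import Optional

# Suffix wording taken from the description above.
COT_SUFFIX = "Let's think a bit step by step"


def build_prompt(question: str, technique: str = "plain",
                 max_words: Optional[int] = None) -> str:
    """Return the question with the suffix for the chosen technique.

    `technique` is a hypothetical label: "plain", "cot", or "ccot".
    """
    if technique == "plain":
        # Plain prompting: the question is sent as-is.
        return question
    if technique == "cot":
        # Zero-shot CoT: ask the model to reason before answering.
        return f"{question}\n{COT_SUFFIX}."
    if technique == "ccot":
        # Zero-shot CCoT: same as CoT, plus a word limit N.
        if max_words is None or max_words <= 0:
            raise ValueError("ccot needs a positive max_words")
        return f"{question}\n{COT_SUFFIX} and limit to {max_words} words."
    raise ValueError(f"unknown technique: {technique}")
```

For example, `build_prompt("What was the total revenue?", "ccot", max_words=45)` appends the word-limited suffix, while `"cot"` appends the unconstrained one.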

The paper reports results for N = 15, 30, 45, 60, and 100. CCoT matched or outperformed CoT for N = 60 and N = 100. As the paper notes, the CCoT technique works with large models but not with smaller ones. If CCoT works as described in the paper, it should lead to lower latency, lower token usage, more coherent responses, and reduced cost.

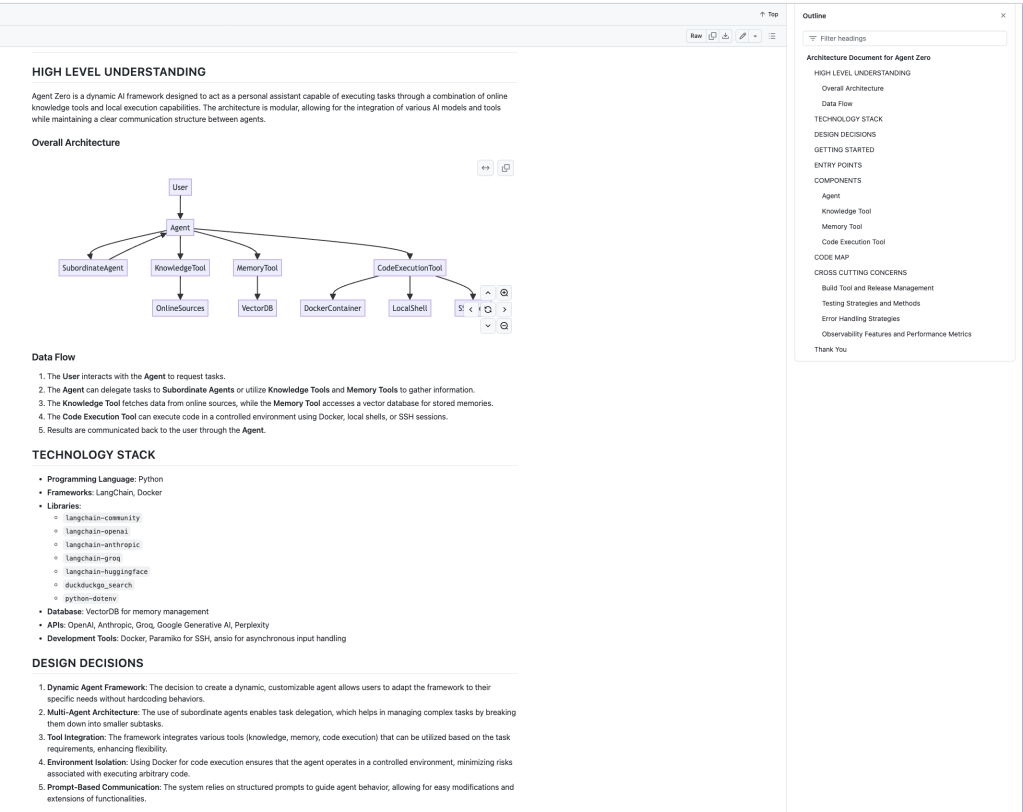

One of the interesting LLM use cases I worked on lately was building RAG over table data. In that project, we did extensive pre-processing of the documents at ingestion time so that, at query time, we could first find the right table in the right format and then answer the query using a well-crafted prompt and tool usage.

In this post, I will show you how to do question answering over a single table without any pre-processing. We will take a screenshot of the table and then use OpenAI’s vision-capable models (gpt-4o and gpt-4o-mini) to generate answers using three prompting techniques: plain prompting, CoT (Chain of Thought) prompting, and CCoT (Constrained Chain of Thought) prompting.
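As a rough sketch of what sending a table screenshot to a vision model can look like, the helper below packs a prompt and an image into the chat message format that OpenAI's Chat Completions API accepts for image input. The helper name `build_vision_messages` and the default PNG MIME type are assumptions for illustration, not necessarily how the experiment in this post is wired up.

```python
import base64


def build_vision_messages(image_bytes: bytes, prompt: str,
                          mime_type: str = "image/png") -> list:
    """Build a single user message holding the prompt text and the image.

    The image is embedded inline as a base64 data URL, so no file
    hosting is needed for a local screenshot.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {
                    "type": "image_url",
                    "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                },
            ],
        }
    ]
```

With the official `openai` Python package, the result could then be passed as `messages` to `client.chat.completions.create(model="gpt-4o", messages=messages)`, swapping in `gpt-4o-mini` to compare the smaller model.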
