Gods, Interns, and Cogs: A useful framework to categorize AI use cases

I recently came across an insightful article by Drew Breunig that introduces a compelling framework for categorizing the use cases of Generative AI (Gen AI) and Large Language Models (LLMs). He classifies these applications into three distinct categories: Gods, Interns, and Cogs. Each bucket represents a different level of automation and complexity, and it’s fascinating to consider how these categories are shaping the AI landscape today.

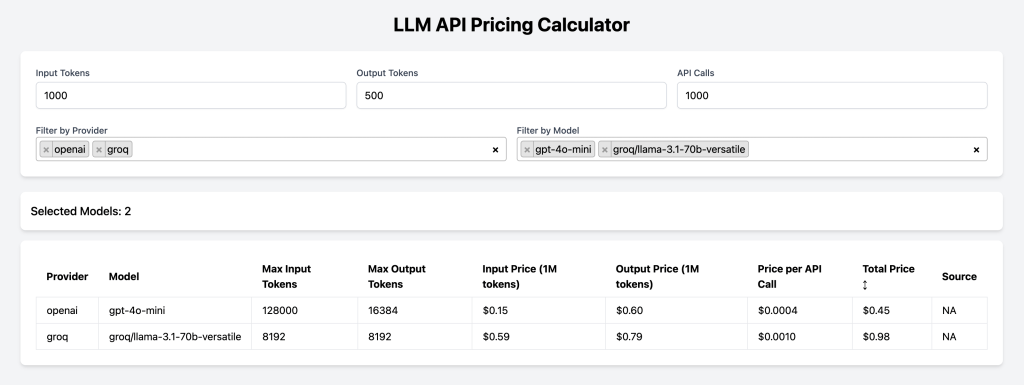

LLM API Pricing Calculator

I’ve recently begun building small, client-side-only HTML/JS web apps. I generate the first version of most of these tools with ChatGPT in under 30 minutes. One example is my LLM API pricing calculator (https://tools.o14.ai/llm-cost-calculator.html). Other LLM API pricing calculators already exist, but I build these tools for several reasons:

- Known User Base: I need the tool myself, so I already have its first user.

- Rapid Prototyping: Experimenting with the initial idea is frictionless. Building the first version typically takes only 5-8 prompts.

- Focus on Functionality: There’s no pressure to optimize the code at first. It simply needs to function.

- Iterative Development: Using the initial version reveals which additional features are needed. For instance, the first iteration of the LLM API pricing calculator only displayed prices based on the number of API calls and input/output tokens. As I used it, I realized I needed to filter by provider and model, so I added those features.

- Privacy: Because the tools run entirely client-side, I know no one is tracking me.

- Learning by Doing: This process allows me to learn about both the strengths and limitations of LLMs for code generation.

The LLM API pricing calculator shows the pricing of different LLM APIs, as shown in the screenshot. You can read its source code with your browser’s View Source.

Webpage: https://tools.o14.ai/llm-cost-calculator.html

The prompt that generated the first version of the app is shown below. I also provided an image showing how I wanted the UI to look.

We want to build a simple HTML Javascript based calculator as shown in the image. The table data will come from a remote JSON at location https://raw.githubusercontent.com/BerriAI/litellm/refs/heads/main/model_prices_and_context_window.json. The structure of JSON looks like as shown below. We will use tailwind css. Generate the code

```json
{
  "gpt-4": {
    "max_tokens": 4096,
    "max_input_tokens": 8192,
    "max_output_tokens": 4096,
    "input_cost_per_token": 0.00003,
    "output_cost_per_token": 0.00006,
    "litellm_provider": "openai",
    "mode": "chat",
    "supports_function_calling": true
  },
  "gpt-4o": {
    "max_tokens": 4096,
    "max_input_tokens": 128000,
    "max_output_tokens": 4096,
    "input_cost_per_token": 0.000005,
    "output_cost_per_token": 0.000015,
    "litellm_provider": "openai",
    "mode": "chat",
    "supports_function_calling": true,
    "supports_parallel_function_calling": true,
    "supports_vision": true
  }
}
```

The calculator uses pricing information maintained by the LiteLLM project. You can see all the prices at https://github.com/BerriAI/litellm/blob/main/model_prices_and_context_window.json. In my experience, this data is not always up to date, so you should always confirm the latest price on the provider’s pricing page.
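At its core, the calculator does simple arithmetic over these JSON entries: the cost of one call is input tokens × `input_cost_per_token` plus output tokens × `output_cost_per_token`, multiplied by the number of calls. The app itself is HTML/JS; the Python sketch below only illustrates that arithmetic and the provider filter, using an inline copy of the two sample entries from the prompt instead of fetching the remote JSON. The function names `estimate_cost` and `models_for_provider` are hypothetical.

```python
# Inline copy of the two sample entries from the prompt above.
# A real calculator would fetch the full LiteLLM JSON file instead.
PRICES = {
    "gpt-4": {
        "input_cost_per_token": 0.00003,
        "output_cost_per_token": 0.00006,
        "litellm_provider": "openai",
    },
    "gpt-4o": {
        "input_cost_per_token": 0.000005,
        "output_cost_per_token": 0.000015,
        "litellm_provider": "openai",
    },
}


def estimate_cost(model, calls, input_tokens, output_tokens, prices=PRICES):
    """Total USD cost of `calls` API calls, each with the given token counts."""
    entry = prices[model]
    per_call = (
        input_tokens * entry["input_cost_per_token"]
        + output_tokens * entry["output_cost_per_token"]
    )
    return calls * per_call


def models_for_provider(provider, prices=PRICES):
    """Model names whose `litellm_provider` matches, sorted alphabetically."""
    return sorted(
        name for name, entry in prices.items()
        if entry.get("litellm_provider") == provider
    )
```

For example, `print(f"${estimate_cost('gpt-4o', calls=1000, input_tokens=500, output_tokens=200):.2f}")` prints `$5.50`, that is, 1,000 × (500 × 0.000005 + 200 × 0.000015).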

Monkey patching autoevals to show token usage

I use the autoevals library to write evals that assess the output of LLMs. If you have never written an eval before, let me explain with a simple example. Let’s assume you are building a quote generator that asks an LLM to generate inspirational Steve Jobs quotes for software engineers.
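Monkey patching means replacing a method on an existing class (or a function in a module) at runtime, without editing its source. The full post applies this to autoevals; the sketch below shows only the general pattern in plain Python. `FakeCompletions` is a hypothetical stand-in for an LLM client whose responses carry a `usage` field; it is not the real autoevals or OpenAI class.

```python
import functools
from types import SimpleNamespace


class FakeCompletions:
    """Hypothetical stand-in for an LLM client (not a real library class)."""

    def create(self, **kwargs):
        # Return a canned answer plus token usage, like a chat completion would.
        return SimpleNamespace(
            content="Stay hungry, stay foolish.",
            usage=SimpleNamespace(prompt_tokens=12, completion_tokens=7),
        )


# Running totals collected by the patched method.
token_totals = {"prompt_tokens": 0, "completion_tokens": 0}

# Keep a reference to the original so the wrapper can delegate to it.
_original_create = FakeCompletions.create


@functools.wraps(_original_create)
def create_with_usage(self, **kwargs):
    response = _original_create(self, **kwargs)
    token_totals["prompt_tokens"] += response.usage.prompt_tokens
    token_totals["completion_tokens"] += response.usage.completion_tokens
    print(f"prompt_tokens={response.usage.prompt_tokens}, "
          f"completion_tokens={response.usage.completion_tokens}")
    return response


# The monkey patch: existing and future instances now use the wrapper.
FakeCompletions.create = create_with_usage
```

After patching, calling `FakeCompletions().create(model="...")` twice prints the usage of each call and leaves `token_totals` at 24 prompt tokens and 14 completion tokens. The caller’s code does not change at all, which is the point of patching rather than editing the library.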

Prompt Engineering Lessons We Can Learn From Claude System Prompts

Anthropic published Claude’s system prompts on its documentation website this week. Users spend countless hours trying to get AI assistants to leak their system prompts, so Anthropic publishing its system prompts in the open suggests two things: 1) prompt leakage is less of an attack vector than most people think, and 2) any useful real-world GenAI application is much more than its system prompt. Such applications are compound AI systems with a user-friendly UX, interface, and features, along with workflows, multiple search indexes, and integrations.

Compound AI systems, as defined by the Berkeley AI Research (BAIR) blog, are systems that tackle AI tasks by combining multiple interacting components. These components can include multiple calls to models, retrievers, or external tools. Retrieval augmented generation (RAG) applications, for example, are compound AI systems, as they combine (at least) a model and a data retrieval system. Compound AI systems leverage the strengths of various AI models, tools, and pipelines to enhance performance, versatility, and reusability compared with using individual models alone.
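To make the definition concrete, here is a toy compound system in Python in which a retriever feeds context into a model call. Both components are hypothetical stand-ins: `retrieve` is a naive word-overlap ranker and `fake_model` just echoes its prompt. A real RAG application would use a search index and an LLM API instead.

```python
import re

DOCS = [
    "Claude 3.5 Sonnet is a model from Anthropic.",
    "Retrieval augmented generation combines a retriever with a model.",
    "Tailwind CSS is a utility-first CSS framework.",
]


def tokenize(text):
    """Lowercase word set, used for a crude overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, docs=DOCS, k=1):
    """Component 1 (retriever): rank documents by word overlap with the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def fake_model(prompt):
    """Component 2 (model): hypothetical stand-in for an LLM call."""
    return f"[answer generated from]\n{prompt}"


def answer(query):
    """The compound system: the retriever's output becomes part of the model's prompt."""
    context = "\n".join(retrieve(query))
    prompt = f"Context:\n{context}\n\nQuestion: {query}"
    return fake_model(prompt)
```

Swapping either component (a vector index for `retrieve`, a real API call for `fake_model`) leaves `answer` unchanged, which is part of the reusability argument above.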

Anthropic has released system prompts for three models: Claude 3.5 Sonnet, Claude 3 Opus, and Claude 3 Haiku. We will look at the Claude 3.5 Sonnet system prompt (July 12th, 2024), which is close to 1,200 input tokens long.

Using ffmpeg, yt-dlp, and gpt-4o to Automate Extraction and Explanation of Python Code from YouTube Videos

Today I was watching a video on LLM evaluation (https://www.youtube.com/watch?v=SnbGD677_u0). It is a long video (2.5 hours) with multiple sections presented by different speakers. In one section, the speaker showed code in Jupyter notebooks. Because of the small font and the speaker’s pace, that section was hard to follow.

I wondered whether I could use yt-dlp along with an LLM to solve this problem. This is what I wanted to do:

- Download the specific section of a video

- Take screenshots of frames from that section

- Send the screenshots to an LLM to extract the code

- Ask the LLM to explain the code step by step
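The first two steps map onto command-line calls, and the third onto a chat request with image parts. Below is a minimal Python sketch that only builds the commands and the request payload and does not run them. The `--download-sections "*START-END"` syntax (a `*` prefix means a time range rather than a chapter) and the ffmpeg `fps` filter are real options, but check `yt-dlp --help` and the ffmpeg docs for your versions. The start/end times, file names, one-frame-every-10-seconds rate, and helper names are placeholder assumptions.

```python
import base64


def download_section_cmd(url, start, end, out="section.mp4"):
    """yt-dlp command that downloads only the START-END time range of a video."""
    return ["yt-dlp", "--download-sections", f"*{start}-{end}",
            "--merge-output-format", "mp4", "-o", out, url]


def extract_frames_cmd(video="section.mp4", every_seconds=10,
                       pattern="frames/frame_%04d.png"):
    """ffmpeg command that saves one frame every `every_seconds` seconds."""
    return ["ffmpeg", "-i", video, "-vf", f"fps=1/{every_seconds}", pattern]


def build_messages(png_images, instruction):
    """Chat Completions-style message: one text part plus one image part per screenshot."""
    content = [{"type": "text", "text": instruction}]
    for png in png_images:
        b64 = base64.b64encode(png).decode("ascii")
        content.append({"type": "image_url",
                        "image_url": {"url": f"data:image/png;base64,{b64}"}})
    return [{"role": "user", "content": content}]
```

To run it, create a `frames/` directory, pass each command to `subprocess.run(cmd, check=True)`, read the PNG files as bytes, and send the result of `build_messages(...)` to gpt-4o, for example with the OpenAI Python SDK’s `client.chat.completions.create(model="gpt-4o", messages=messages)`. A follow-up request can then ask the model to explain the extracted code step by step.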

How I use LLMs: Building a Tab Counter Chrome Extension

Last night, I found myself overwhelmed by open tabs in Chrome. I wondered how many I had open, but couldn’t find a built-in tab counter. While third-party extensions likely exist, I’m not comfortable installing them.

I have built Chrome extensions before (I know, it’s possible in a few hours!), but the process usually frustrates me. Figuring out permissions, content scripts vs. service workers, and icon creation (in various sizes) consumes time, and navigating the Chrome extension documentation can be equally daunting.

These “nice-to-have” projects often fall by the wayside due to the time investment. After all, I can live without a tab counter.

LLMs (Large Language Models) help me build such projects. Despite their limitations, they significantly boost my productivity on these tasks. Building a Chrome extension isn’t about resume padding; it’s about scratching an itch. LLMs excel at creating these small utilities that automate parts of my workflow. I use them to write single-purpose Bash scripts, Python scripts, and Chrome extensions. You can find some of my LLM wrapper tools on GitHub here.

Building a Bulletproof Prompt Injection Detector using SetFit with Just 32 Examples

In my previous post, we built a prompt injection detector by training a LogisticRegression classifier on embeddings of the SPML Chatbot Prompt Injection Dataset. Today, we will look at how to first fine-tune the embedding model and then train a LogisticRegression classifier on top of it. I learned this technique from Chapter 11 of the Hands-On Large Language Models book. I am enjoying this book: it takes a practical approach to LLMs and teaches many useful techniques that you can apply in your work.

We can fine-tune an embedding model on the complete dataset or on just a few examples. In this post, we will look at fine-tuning for few-shot classification. This technique shines when you have only a dozen or so labeled examples in your dataset.
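SetFit’s first stage is what makes a handful of examples go a long way: it turns the labeled examples into many sentence pairs and fine-tunes the embedding model contrastively, pulling same-label texts together and pushing different-label texts apart; a classifier head such as LogisticRegression is then trained on the tuned embeddings. The sketch below shows only the pair-generation idea in plain Python. It is not the setfit library’s code, which has its own pair-sampling strategies, and `make_contrastive_pairs` is a hypothetical name.

```python
import itertools
import random


def make_contrastive_pairs(examples, seed=0):
    """Turn (text, label) examples into sentence pairs for contrastive fine-tuning.

    Same label      -> target similarity 1.0 (positive pair)
    Different label -> target similarity 0.0 (negative pair)
    """
    pairs = [
        (text_a, text_b, 1.0 if label_a == label_b else 0.0)
        for (text_a, label_a), (text_b, label_b) in itertools.combinations(examples, 2)
    ]
    random.Random(seed).shuffle(pairs)  # deterministic shuffle for reproducibility
    return pairs
```

With 32 labeled examples (say, 1 for a prompt injection and 0 for a benign prompt), enumerating every pair yields 32 × 31 / 2 = 496 training pairs, far more signal than 32 single examples.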

I fine-tuned the model on RunPod (https://www.runpod.io/). Fine-tuning and evaluating the model cost me 36 cents. I used a machine with 1 x RTX A5000 GPU, 16 vCPUs, and 62 GB of RAM.

A look at Patchwork: YC backed LLM Startup

In the last couple of days, I’ve spent a few hours playing with Patchwork, an open-source framework that leverages AI to accelerate asynchronous development tasks like code reviews, linting, patching, and documentation. The company behind it is backed by Y Combinator.

The GitHub repository for Patchwork can be found here: https://github.com/patched-codes/patchwork.

There are two ways to use Patchwork. The first is its open-source CLI, which uses LLMs such as OpenAI’s models to perform tasks. You can install the CLI with the following command:

```shell
pip install 'patchwork-cli[all]' --upgrade
```

The other option is to use their cloud offering at https://app.patched.codes/signin. There, you can either leverage predefined workflows or create your own using a visual editor.

This post focuses on my experience with their CLI tool, as I haven’t used their cloud offering yet.

Patchwork comes bundled with six patchflows:

- GenerateDocstring: Generates docstrings for methods in your code.

- AutoFix: Generates and applies fixes to code vulnerabilities within a repository.

- PRReview: Upon PR creation, extracts code diffs, summarizes changes, and comments on the PR.

- GenerateREADME: Creates a README markdown file for a given folder to add documentation to your repository.

- DependencyUpgrade: Updates your dependencies from vulnerable versions to fixed ones.

- ResolveIssue: Identifies the files in your repository that need updates to resolve an issue (or bug) and creates a PR to fix it.

A patchflow is composed of multiple steps, and each step is implemented in Python.
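To illustrate the general idea of a flow built from steps, here is a hypothetical sketch in plain Python: each step is a class with a `run` method that takes a shared dict of inputs and returns new outputs, and the flow runs the steps in order, merging each step’s outputs into the shared state. The class names, the `run` signature, and `run_flow` are invented for illustration and are not Patchwork’s actual API.

```python
from typing import Any, Dict


class ReadFile:
    """Hypothetical step: pull one file's source out of an in-memory 'repo'."""

    def run(self, inputs: Dict[str, Any]) -> Dict[str, Any]:
        return {"code": inputs["files"][inputs["path"]]}


class DraftDocstring:
    """Hypothetical step: stand-in for an LLM call that would draft a docstring."""

    def run(self, inputs: Dict[str, Any]) -> Dict[str, Any]:
        # Crude function-name extraction, e.g. "def add(a, b): ..." -> "add".
        name = inputs["code"].split("(")[0].removeprefix("def ").strip()
        return {"docstring": f"Docstring for {name}."}


def run_flow(steps, inputs):
    """Run steps in order; each step sees everything produced before it."""
    state = dict(inputs)
    for step in steps:
        state.update(step.run(state))
    return state
```

For example, `run_flow([ReadFile(), DraftDocstring()], {"files": {"m.py": "def add(a, b):\n    return a + b"}, "path": "m.py"})` returns a state whose `"docstring"` is `"Docstring for add."`. Passing a shared dict keeps steps decoupled, so they can be reordered or reused across flows.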

To understand how Patchwork works, we’ll explore a couple of predefined patchflows.

Meeting Long-Tail User Needs with LLMs

Today I watched a talk by Maggie Appleton from the local-first conference. As she points out in her insightful talk on home-cooked software and barefoot developers, there is a significant gap in addressing long-tail user needs: the specific requirements of small groups that big tech companies often overlook. This disconnect stems primarily from the industrial software approach, which prioritizes scalability and profitability over the nuanced, localized solutions that users truly require.

The limitations of software from big tech companies become evident when we look at its inability to address the long tail of user needs. FAANG companies focus on creating solutions that appeal to the mass market, often sidelining niche requirements. For example, Google Maps can efficiently direct users from one location to another, but it does not offer features like tracking historical site boundaries, which may be crucial for a historian or a local community leader.

Putting Constrained-CoT Prompting Technique to the Test: A Real-World Experiment

Today I was reading the paper “Concise Thoughts: Impact of Output Length on LLM Reasoning and Cost” and decided to apply it to a problem I solved a couple of months ago. The paper introduces the Constrained Chain of Thought (CCoT) prompting technique as an optimization over Chain of Thought (CoT) prompting.

Chain of Thought prompting is a technique that encourages LLMs to generate responses by breaking down complex problems into smaller, sequential steps. This technique enhances reasoning and improves the model’s ability to arrive at accurate conclusions by explicitly outlining the thought process involved in solving a problem or answering a question.

With the zero-shot CoT technique, adding the line “Let’s think a bit step by step” to the prompt encourages the model to first generate its reasoning and then come up with an answer. In zero-shot CCoT, the prompt is changed to limit the number of words, for example “Let’s think a bit step by step and limit to N words.” Here, N can be any number suitable for your problem.

The paper reports results for N = 15, 30, 45, 60, and 100. CCoT was equal to or better than CoT for N = 60 and N = 100. As the paper notes, the CCoT technique works with large models but not with smaller ones. If CCoT works as the paper describes, it leads to lower latency, lower token usage, more coherent responses, and reduced cost.
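The three prompt variants differ only in the suffix appended to the question, which makes them easy to generate programmatically when comparing techniques. A minimal sketch using the suffix wording quoted above; `build_prompt`, `within_limit`, and `PAPER_LIMITS` are hypothetical names, and splitting on whitespace is only a rough word count.

```python
def build_prompt(question, technique="plain", n_words=None):
    """Return the prompt for one of three techniques: plain, CoT, or CCoT."""
    if technique == "plain":
        return question
    if technique == "cot":
        return f"{question}\nLet's think a bit step by step."
    if technique == "ccot":
        if n_words is None:
            raise ValueError("CCoT needs a word limit n_words")
        return f"{question}\nLet's think a bit step by step and limit to {n_words} words."
    raise ValueError(f"unknown technique: {technique}")


def within_limit(response, n_words):
    """Rough check of whether a response respected the CCoT word budget."""
    return len(response.split()) <= n_words


# The word limits the paper experimented with.
PAPER_LIMITS = (15, 30, 45, 60, 100)
```

For a sweep, `[build_prompt(q, "ccot", n) for n in PAPER_LIMITS]` produces one CCoT prompt per word limit, and `within_limit` flags responses that ignored the budget.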

One of the more interesting LLM use cases I worked on recently was building RAG over tabular data. In that project, we did extensive pre-processing of the documents at ingestion time so that, at inference/query time, we could first find the right table in the right format and then answer the query using a well-crafted prompt and tool usage.

In this post, I will show you how to do question answering over a single table without any pre-processing. We will take a screenshot of the table and then use OpenAI’s vision-capable models (gpt-4o and gpt-4o-mini) to generate answers using three prompting techniques: plain prompting, CoT (Chain of Thought) prompting, and CCoT (Constrained Chain of Thought) prompting.
