Cursor, the AI-powered code editor that has transformed how developers write code, recently underwent a significant pricing overhaul that has sparked intense debate in the developer community. The changes reveal a fundamental challenge facing AI coding tools: how to fairly price services when underlying costs vary dramatically based on usage patterns.

The Old Pricing Model

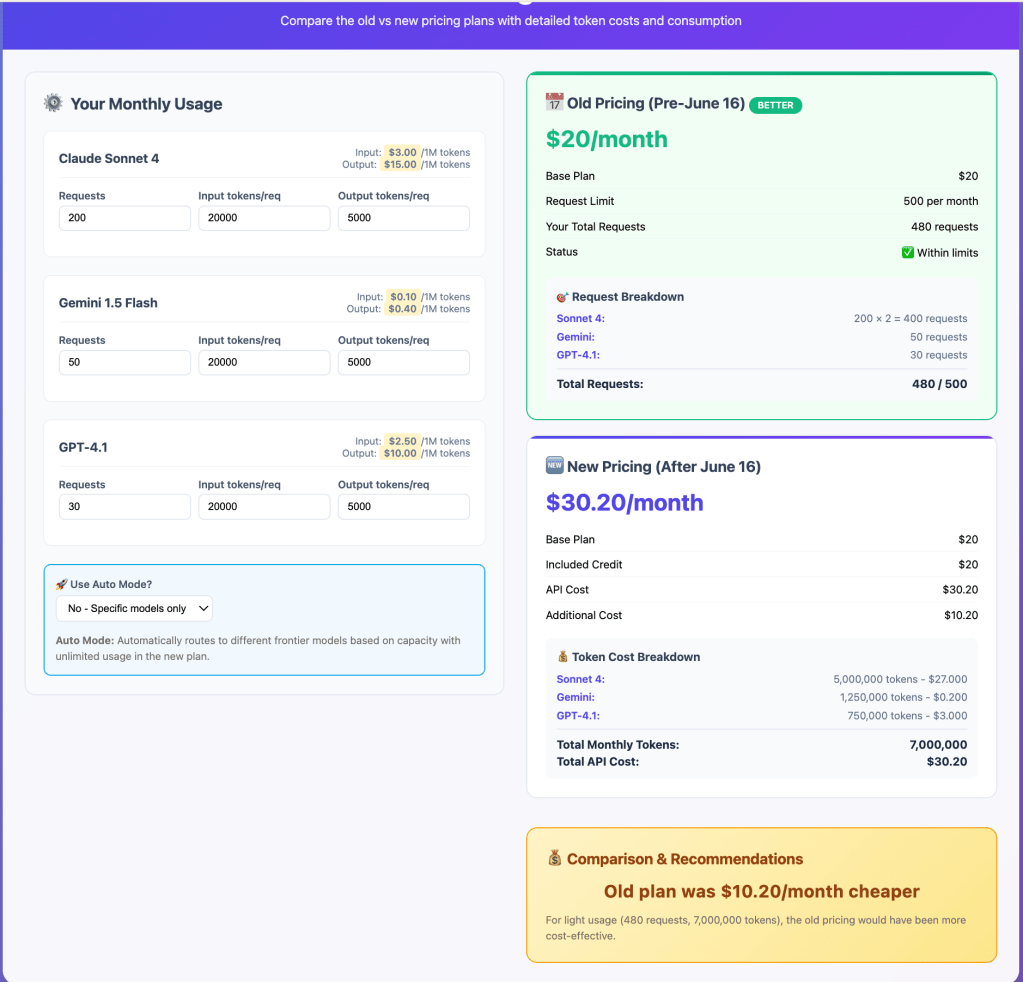

Previously, Cursor’s $20 per month Pro plan operated on a straightforward request-based system. Users received 500 requests monthly, with Claude Sonnet 4 consuming two request units due to its higher computational demands, while other models like GPT-4.1 and Gemini consumed just one unit each. This meant Pro users could make approximately 250 Claude Sonnet 4 requests per month.
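The request-unit arithmetic above can be sketched in a few lines. This is only an illustration of the numbers quoted in the paragraph; the dictionary keys and function names are hypothetical labels, not Cursor identifiers.

```python
# Old Pro plan as described above: $20 per month for 500 request units.
PLAN_PRICE_USD = 20.0
MONTHLY_REQUEST_UNITS = 500

# Units charged per request. The keys are illustrative labels only.
UNITS_PER_REQUEST = {
    "claude-sonnet-4": 2,
    "gpt-4.1": 1,
    "gemini": 1,
}


def max_requests(model: str) -> int:
    """Requests per month if the whole quota is spent on one model."""
    return MONTHLY_REQUEST_UNITS // UNITS_PER_REQUEST[model]


def effective_price_per_request(model: str) -> float:
    """Plan price spread evenly over the requests the quota allows."""
    return PLAN_PRICE_USD / max_requests(model)


for model in UNITS_PER_REQUEST:
    print(f"{model}: {max_requests(model)} requests, "
          f"${effective_price_per_request(model):.2f} each")
```

Under this model a request had a fixed effective price no matter how many tokens it consumed.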

While this pricing model was transparent and predictable, it failed to account for the reality of modern AI systems where token consumption varies wildly between requests. A simple code completion might use 100 tokens, while a complex refactoring task could consume 50,000+ tokens—yet both counted as a single “request” under the old system.

The New Pricing Model

On June 16, 2025, Cursor introduced a new pricing model that reflects actual API costs. The Pro plan now includes $20 of frontier model usage per month at API pricing, with an option to purchase additional usage at cost. For users who prefer unlimited usage, Cursor offers an “Auto” mode that automatically routes requests to different frontier models based on capacity.

As Cursor explained in their blog post: “New models can spend more tokens per request on longer-horizon tasks. Though most users’ costs have stayed fairly constant, the hardest requests cost an order of magnitude more than simple ones. API-based pricing is the best way to reflect that.”

Based on current API pricing, the $20 credit covers approximately 225 Claude Sonnet 4 requests, 550 Gemini requests, or 650 GPT-4.1 requests under typical usage patterns. However, with coding agents and complex context passing, actual costs can be significantly higher.
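To make these figures concrete, the rough sketch below derives the average per-request cost implied by the request counts above, then shows how quickly the credit shrinks when a request costs a multiple of the typical one. The function names and the multiplier parameter are assumptions for illustration; the 10x case simply mirrors the "order of magnitude" wording in Cursor's quote.

```python
import math

BUDGET_USD = 20.0

# Approximate requests covered by the $20 credit, as quoted above.
REQUESTS_COVERED = {
    "Claude Sonnet 4": 225,
    "Gemini": 550,
    "GPT-4.1": 650,
}


def implied_cost_per_request(model: str) -> float:
    """Average cost of a typical request implied by the quoted counts."""
    return BUDGET_USD / REQUESTS_COVERED[model]


def requests_at_multiplier(model: str, cost_multiplier: float) -> int:
    """Requests the credit covers if every request costs
    `cost_multiplier` times as much as a typical one."""
    return math.floor(REQUESTS_COVERED[model] / cost_multiplier)


for model in REQUESTS_COVERED:
    print(f"{model}: ~${implied_cost_per_request(model):.3f} per typical request, "
          f"{requests_at_multiplier(model, 10)} requests at 10x cost")
```

With these numbers, a typical Claude Sonnet 4 request works out to roughly $0.09, and a month of requests that each cost ten times as much would exhaust the credit after about 22 of them.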

The Broader Lesson: Token Economics Matter

Cursor’s pricing evolution illustrates a critical principle for LLM-based products: token consumption patterns must drive pricing strategies. Although output tokens are usually priced higher per token, input tokens often account for more of the total bill in real-world scenarios, because agents send large amounts of context with every request. That makes efficient context engineering essential for cost control.
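A small sketch shows how input tokens can dominate the cost of a single request. The prices and token counts here are placeholder assumptions, not figures from Cursor or any provider; output is deliberately priced five times higher per token than input to show that volume can outweigh the per-token rate. Substitute your provider's current rates.

```python
# ASSUMPTION: placeholder prices in USD per million tokens.
# Replace them with your provider's current rates.
INPUT_PRICE_PER_MTOK = 3.00
OUTPUT_PRICE_PER_MTOK = 15.00


def request_cost(input_tokens: int, output_tokens: int) -> tuple[float, float]:
    """Return (input_cost, output_cost) in USD for one request."""
    input_cost = input_tokens * INPUT_PRICE_PER_MTOK / 1_000_000
    output_cost = output_tokens * OUTPUT_PRICE_PER_MTOK / 1_000_000
    return input_cost, output_cost


def input_share(input_tokens: int, output_tokens: int) -> float:
    """Fraction of the request's total cost that comes from input tokens."""
    input_cost, output_cost = request_cost(input_tokens, output_tokens)
    return input_cost / (input_cost + output_cost)


# Hypothetical agent request: a large context in, a short edit out.
in_cost, out_cost = request_cost(input_tokens=50_000, output_tokens=1_000)
print(f"input ${in_cost:.3f}, output ${out_cost:.3f}, "
      f"input share {input_share(50_000, 1_000):.0%}")
```

In this hypothetical request, input accounts for about 91% of the cost even though each output token is five times more expensive, which is why trimming context is usually the first lever for cost control.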

For developers building products in the LLM landscape, this shift serves as a reminder that sustainable pricing requires understanding and reflecting actual usage costs. The days of flat-rate “unlimited” AI services may be numbered as providers grapple with the economic realities of rapidly advancing—and increasingly expensive—AI models.

Cost Calculator

You can explore the cost implications using our interactive Cursor pricing calculator to see how the pricing changes affect different usage patterns.

As the screenshot above shows, the old pricing model did not account for tokens, so the same workload was significantly cheaper under it than under the new plan. When you’re using coding agents and passing in context, you often end up hitting token usage levels similar to what I’ve shown.
