One of the most common use cases of LLMs is summary generation. I have worked on multiple systems where we summarized different kinds of documents: Word files, PDFs, text files, web pages, call transcripts, and video transcripts. I am currently building Videocrawl, where we generate summaries of video content. In almost all the summarization use cases I have implemented, we went with a static summary prompt that instructs the LLM to generate a summary in a specific format. In my recent work, I have been playing with the idea of giving some agency to the summarizer so that it can generate dynamic summarization prompts. In this short post, I will share my approach.

Let’s make it concrete. Let’s assume that we want to summarize the Search R1 paper. This paper covers how we can use reinforcement learning to train LLMs to reason and leverage search engines.

The first step is to read the content of the paper. You can use any PDF reader library to extract the text.

Once we have read the content, we take the text of the first few pages (10-12). I usually take the first 12 pages and ask the LLM to generate a summarization prompt using the instructions shown below.
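The page-selection step can be sketched in a few lines of Python. This is a minimal sketch, not the exact code I use: the helper name `first_pages_excerpt` and the file name `paper.pdf` are made up for illustration, and the commented-out `pypdf` lines are just one possible way to get per-page text.

```python
from typing import Sequence


def first_pages_excerpt(pages: Sequence[str], max_pages: int = 12) -> str:
    """Join the text of the first `max_pages` pages into one excerpt."""
    if max_pages < 1:
        raise ValueError("max_pages must be at least 1")
    # Slicing is safe even when the document has fewer pages than max_pages.
    selected = pages[:max_pages]
    # Skip pages with no extractable text (for example, scanned images).
    return "\n\n".join(text.strip() for text in selected if text and text.strip())


# Page texts would normally come from a PDF reader. With pypdf, for example:
#   from pypdf import PdfReader
#   pages = [page.extract_text() or "" for page in PdfReader("paper.pdf").pages]
pages = [f"Text of page {n}" for n in range(1, 21)]  # stand-in for a 20-page paper
excerpt = first_pages_excerpt(pages)
```

Capping the excerpt keeps the first LLM call cheap while still covering the abstract, introduction, and most of the method for a typical paper.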

Instructions: Analyze the provided document and generate a customized summarization prompt specifically designed for this document.

PHASE 1: DOCUMENT ANALYSIS

- Identify the document type (academic paper, business report, legal document, etc.)

- Extract the main topic and key themes

- Analyze the document structure (sections, headings, organization pattern)

- Identify domain-specific terminology and concepts

- Note any unique features (data visualizations, case studies, arguments, methodologies)

PHASE 2: PROMPT ENGINEERING

Based on your analysis, create a detailed summarization prompt that:

1. Specifies exactly what elements should be extracted from THIS particular document

2. Defines the appropriate structure for summarizing THIS document

3. Includes document-specific questions that will elicit important insights

4. Provides instructions for handling the unique terminology and concepts in THIS document

5. Outlines how to preserve the document's specific logical flow and argument structure

PHASE 3: OUTPUT REQUIREMENTS

Your generated prompt should:

- Begin with "DOCUMENT-SPECIFIC SUMMARIZATION INSTRUCTIONS:" followed by a one-sentence description of what makes this document unique

- Include at least 3 document-specific extraction directives (e.g., "Extract the experimental methodology focusing on the novel [specific technique] described in sections 3.2-3.4")

- Provide clear organizational instructions based on the document's own structure

- Include 2-3 document-specific analytical questions

- Close with output format specifications tailored to this document's content

The generated prompt should be comprehensive enough that anyone following it would produce a summary that captures the unique essence of this specific document rather than a generic summary.
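Wiring these instructions to an LLM could look roughly like the sketch below. Everything named here is an assumption for illustration: `LLM` is a hypothetical callable standing in for whatever client you use, `META_PROMPT` is abbreviated to its first line, and the `<document>` delimiters are an arbitrary choice.

```python
from typing import Callable

# Hypothetical stand-in for any LLM client: takes a prompt string, returns text.
LLM = Callable[[str], str]

# Abbreviated here; in practice this holds the full instructions shown above.
META_PROMPT = (
    "Instructions: Analyze the provided document and generate a customized "
    "summarization prompt specifically designed for this document."
)


def build_meta_request(meta_prompt: str, excerpt: str) -> str:
    """Combine the instructions with the document excerpt in one request."""
    # The delimiters are an arbitrary way to separate instructions from content.
    return f"{meta_prompt}\n\n<document>\n{excerpt}\n</document>"


def generate_summarization_prompt(llm: LLM, excerpt: str) -> str:
    """First LLM call: turn a document excerpt into a document-specific prompt."""
    return llm(build_meta_request(META_PROMPT, excerpt)).strip()


def fake_llm(prompt: str) -> str:
    """Canned response so the sketch runs without network access."""
    return "DOCUMENT-SPECIFIC SUMMARIZATION INSTRUCTIONS: This document is a research paper.\n"


generated_prompt = generate_summarization_prompt(fake_llm, "First pages of the paper")
```

Passing the model in as a plain callable keeps the sketch independent of any particular provider SDK.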

For the Search R1 paper, the LLM generated the following summarization prompt.

DOCUMENT-SPECIFIC SUMMARIZATION INSTRUCTIONS: This document is a research paper introducing the SEARCH-R1 model, a reinforcement learning framework that optimizes large language model interactions with search engines for reasoning tasks.

1. **Document Type and Unique Features:**

- Academic paper with a focus on reinforcement learning and large language models.

- Includes empirical results, methodologies, and technical terms specific to AI and machine learning.

2. **Topics and Key Themes:**

- Challenges of applying RL to LLM search interactions.

- Innovations of SEARCH-R1, including interleaved reasoning and search, and outcome-based rewards.

- Empirical performance evaluations across multiple datasets.

3. **Structure and Organization:**

- Abstract: Overview of objectives and findings.

- Introduction: Context and problem statement.

- Related Works: Background on LLMs and RL.

- Detailed sections on SEARCH-R1 design, experiments, and results.

- Conclusion: Summary and future directions.

4. **Extraction Directives:**

- Extract the key contributions of SEARCH-R1 outlined in the introduction and summary.

- Focus on the experimental methodology and results, particularly on how the model improves performance across datasets (Section 4).

- Highlight the challenges identified for applying RL to LLMs and how SEARCH-R1 addresses them (Section 3).

5. **Analytical Questions:**

- How does SEARCH-R1 differ from previous approaches in integrating search engines with LLMs?

- What are the comparative results of SEARCH-R1 versus other methods across the specified datasets?

6. **Terminology and Concepts:**

- Explain any domain-specific terms such as "Proximal Policy Optimization (PPO)" and "Generalized Advantage Estimation (GAE)" in a simplified manner.

7. **Logical Flow and Argument Structure:**

- Maintain the logical flow from problem statement to solution and results as presented in the paper.

- Ensure each experimental result is connected back to the contributions of the proposed method.

**Output Format:**

- Summary should begin with an overview of the research paper's objectives.

- Follow with sections summarizing the model's innovations, results, and conclusions.

- Include a brief glossary or explanation of technical terms where necessary.

- Conclude with insights on future research directions proposed by the authors.
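Since the generated prompt is itself LLM output, it may be worth checking it against the output requirements before using it. The sketch below is a rough heuristic of my own, not part of the original workflow: `check_generated_prompt` is a hypothetical helper, and it only tests the required header, the number of question lines, and the presence of an output format section.

```python
REQUIRED_HEADER = "DOCUMENT-SPECIFIC SUMMARIZATION INSTRUCTIONS:"


def check_generated_prompt(prompt: str) -> list[str]:
    """Return a list of problems; an empty list means the checks passed."""
    problems = []
    if not prompt.lstrip().startswith(REQUIRED_HEADER):
        problems.append("missing required header")
    # Heuristic: treat every line ending in "?" as an analytical question.
    questions = [ln for ln in prompt.splitlines() if ln.strip().endswith("?")]
    if len(questions) < 2:
        problems.append("fewer than 2 analytical questions")
    if "output format" not in prompt.lower():
        problems.append("no output format section")
    return problems


sample = "\n".join([
    "DOCUMENT-SPECIFIC SUMMARIZATION INSTRUCTIONS: This document is a research paper.",
    "- How does SEARCH-R1 differ from previous approaches?",
    "- What are the comparative results across datasets?",
    "**Output Format:**",
    "- Summary should begin with an overview.",
])
problems = check_generated_prompt(sample)
```

If the list is not empty, one option is to regenerate the prompt or fall back to a static summary prompt.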

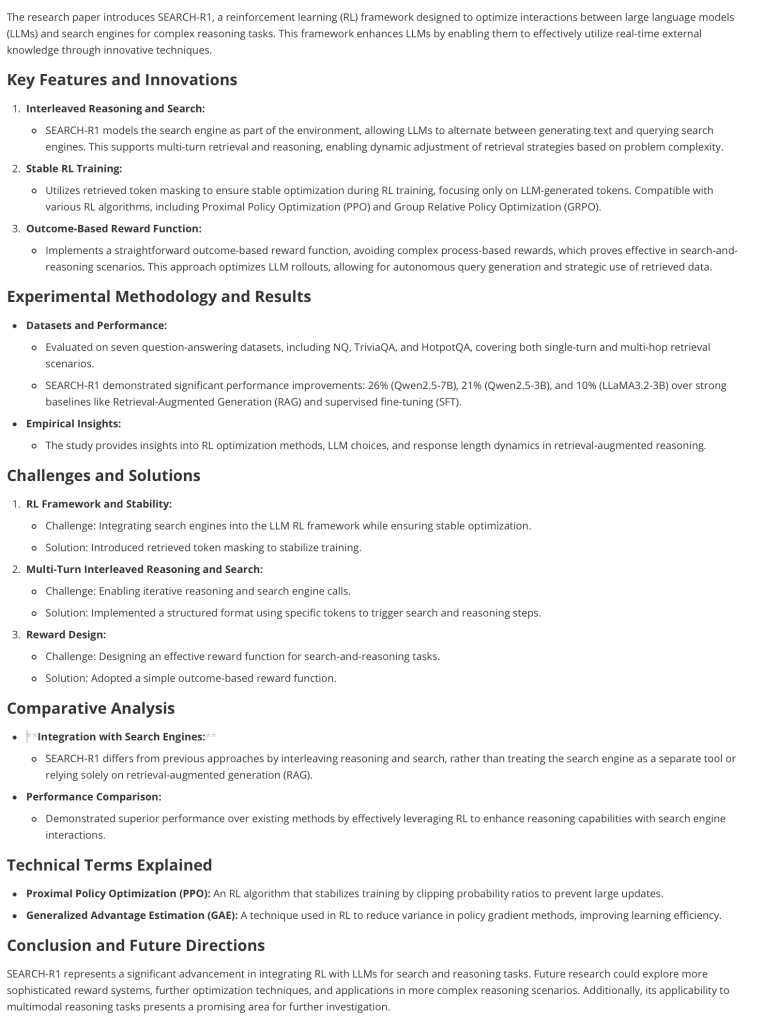

Finally, we pass the generated prompt and the paper to the LLM and ask it to generate the summary.
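This second LLM call can be sketched as follows. As before, `LLM` is a hypothetical callable rather than a real client, and sending the full document text (instead of only the first pages) is my assumption here.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: prompt string in, completion text out


def summarize(llm: LLM, generated_prompt: str, document_text: str) -> str:
    """Second LLM call: apply the document-specific prompt to the document."""
    request = f"{generated_prompt}\n\n<document>\n{document_text}\n</document>"
    return llm(request).strip()


def echo_llm(prompt: str) -> str:
    """Fake model that reports the request size, so the sketch runs offline."""
    return f"(summary placeholder for a {len(prompt)}-character request)"


summary = summarize(
    echo_llm,
    "DOCUMENT-SPECIFIC SUMMARIZATION INSTRUCTIONS: This document is a research paper.",
    "Full paper text",
)
```

The two calls stay separate on purpose: the first one decides how to summarize, and the second one does the summarizing.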
