In July 2022, I wrote a blog on Gitlab’s Postgres schema design. I analyzed the schema design and documented some of the interesting patterns and lesser-known best practices. If my memory serves me correctly, I believe I spent close to 20 hours spread over a couple of weeks writing the post. The blog post also managed to reach the front page of HackerNews.

I use large language models (LLMs) daily for my work. I primarily use the gpt-4o, gpt-4o-mini, and Claude 3.5 Sonnet models exposed via ChatGPT or Claude AI assistants. I tried the o1 model once when it was launched, but I couldn’t find the right use cases for it. At that time, I tried it for a code translation task, but it didn’t work well. So, I decided not to invest much effort in it.

OpenAI’s o1 series models are large language models trained with reinforcement learning to perform complex reasoning. These o1 models think before they answer, producing a long internal chain of thought before responding to the user.

Yesterday, I read a post on o1 by Ben Hylak. In the post, he explained how he initially thought o1 was garbage, but once he understood how to use it correctly, the o1 model started to deliver value for him.

I was using o1 like a chat model — but o1 is not a chat model.

If o1 is not a chat model — what is it?

I think of it like a “report generator.” If you give it enough context, and tell it what you want outputted, it’ll often nail the solution in one-shot.

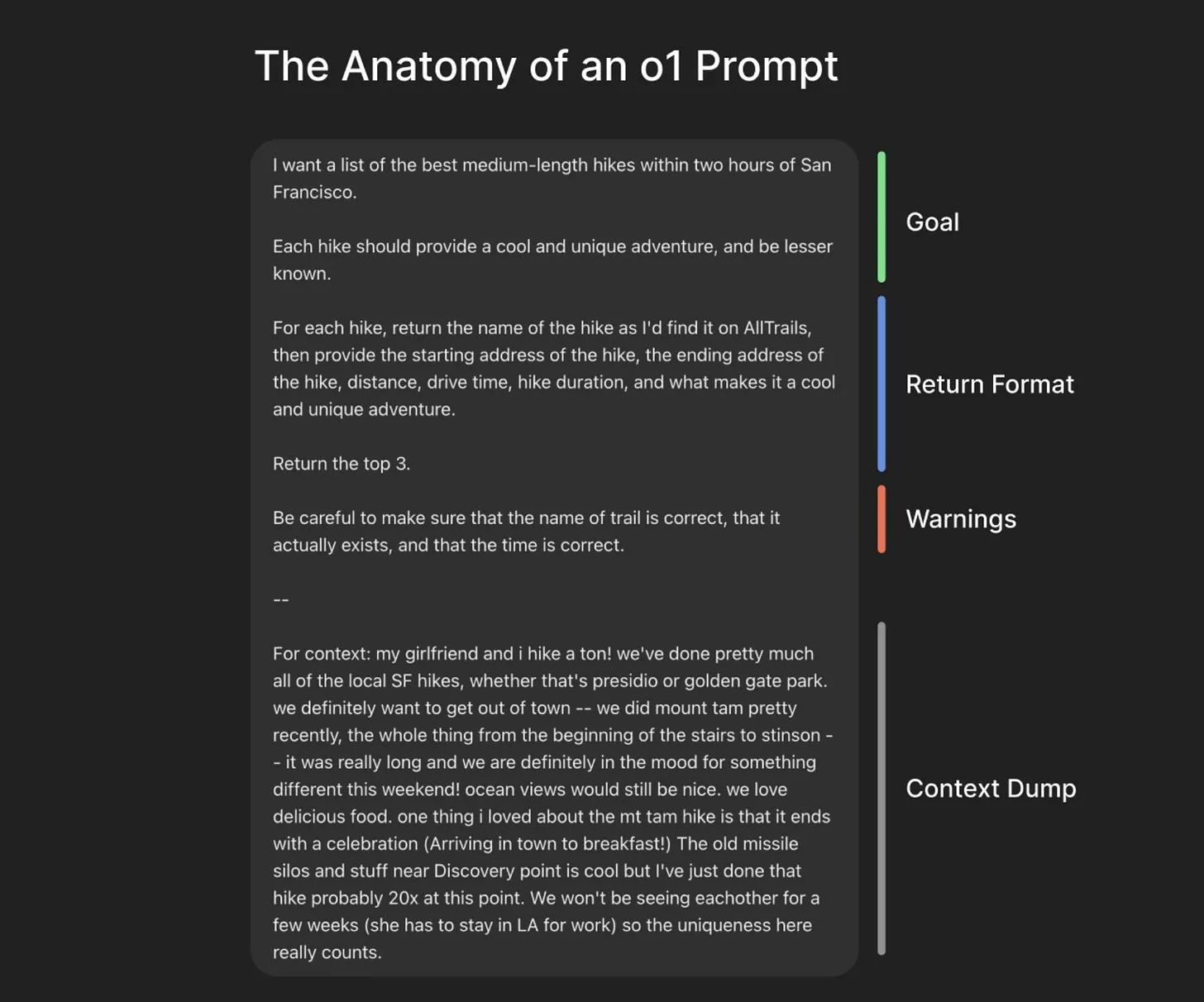

If you have used ChatGPT or Claude, you would have used them in an incremental, multi-turn conversation. This workflow does not work effectively with the o1 model. As Ben mentioned, with o1, you provide all the information in a single, one-shot input and let the model do its magic. He calls these inputs ‘briefs.’ He also shared an example of this approach.

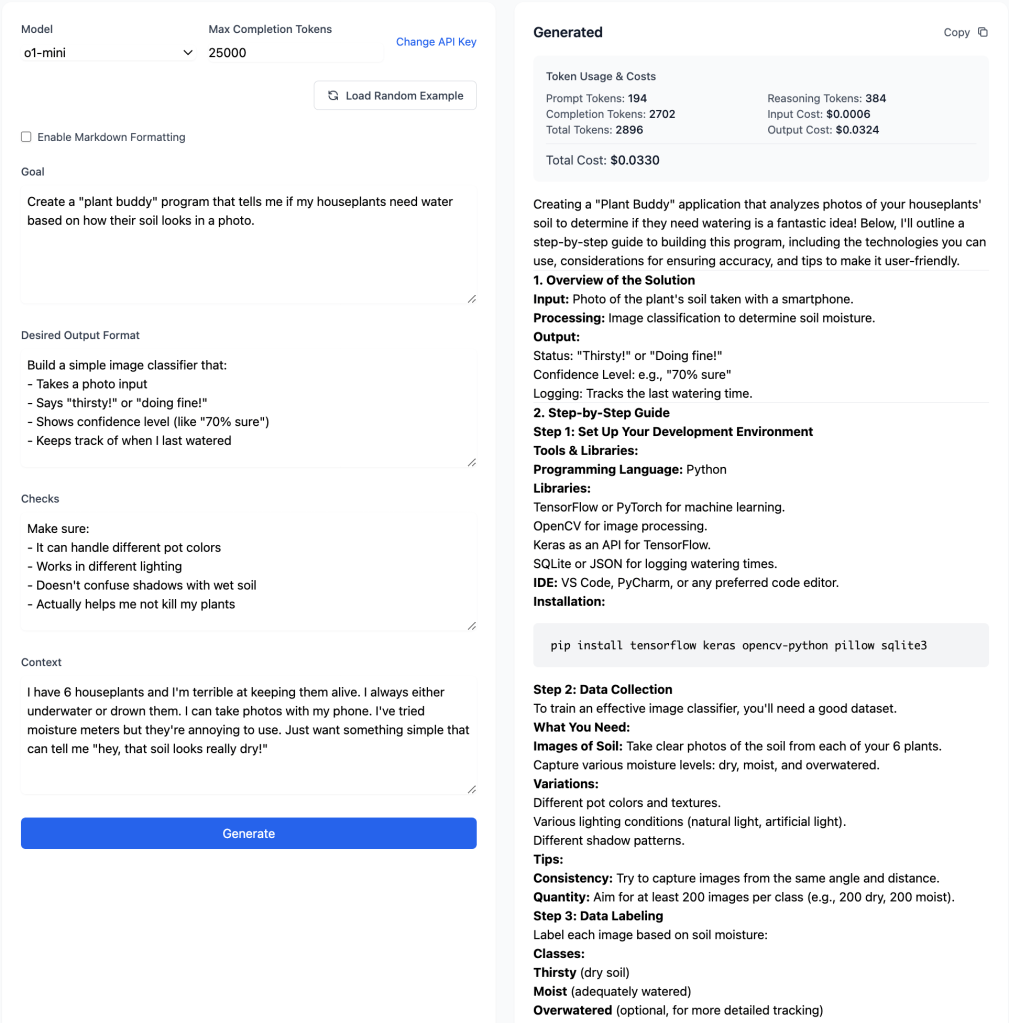

I created a web application using Claude that enforces this way of prompt building. You can access the application here: https://tools.o14.ai/o1-playground.html. It runs entirely client-side. You will be prompted first for your OpenAI API key, which will be stored in your browser’s local storage. Then, you can fill out the form and submit it to OpenAI. An example is shown below. You can view the complete response from the o1-mini model. here.

If you have been working with LLMs then you will know that is just good prompt engineering.

OpenAI documentation https://platform.openai.com/docs/guides/reasoning mention some interesting points about o1 model.

- OpenAI recommends reserving at least 25,000 tokens for reasoning and outputs when you start experimenting with these models. As you become familiar with the number of reasoning tokens your prompts require, you can adjust this buffer accordingly.

- Starting with

o1-2024-12-17, o1 models in the API will avoid generating responses with markdown formatting. To signal to the model when you do want markdown formatting in the response, include the stringFormatting re-enabledon the first line of yourdevelopermessage. - o1 model has following limitations:

- Available to Tier 5 customers only

- Streaming support not available in the REST API

- Parallel tool calls not yet supported

- Not available in the Batch API

- Currently unsupported API parameters:

temperature,top_p,presence_penalty,frequency_penalty,logprobs,top_logprobs,logit_bias

Now, let’s get to the main point of this post: Can the OpenAI o1 model analyze the SQL schema of GitLab?

GitLab’s schema is quite large, with 40,195 lines and 959 tables.

Since the OpenAI o1 context length is limited to 200,000 tokens for the o1 model and 128,000 tokens for the o1-mini model, the complete schema cannot be processed at once. Therefore, I only included the CREATE TABLE DDL statements. Even these 959 table DDLs consume 115,553 tokens.

I encountered an unexpected behavior when calling the model with the context and setting max_completion_tokens to 25,000. The OpenAI API returned an error:

This model's maximum context length is 128000 tokens. However, you requested 143800 tokens (118800 in the messages, 25000 in the completion). Please reduce the length of the messages or completion.

I initially believed that the 128,000 token limit is for input context and for output it is 65,536 tokens . However, it seems the 128,000 limit applies to the combined input and output. As far as I know, this differs from how other OpenAI models function. Please correct me if I’m mistaken.

For now in my o1 playground UI, the onus is on the user to stay within the context window. The UI will display an error if the limit is exceeded.

Key takeaway: With a large input context like this, the model won’t have enough tokens for reasoning and generating an output. To allocate 25,000 tokens for reasoning, we should restrict the input to around 100,000 tokens.

Now, let’s attempt our task. I want to see if o1 can identify the same patterns I discovered in my analysis.

Goal:

I want you to write detailed analysis report on GitLab postgres schema. It should cover useful lessons a developer can learn from a real world Postgres schema like GitLab.

Identify and analyze database design patterns and the problem the business problem are solving.

Output Format:

- Start by high level analysis of the schema

- List important database design patterns you see in the schema

- For each design decision

- there should be under separate heading

- Show examples with code snippets. Explain them using your knowledge of schema design best practices

- Suggest any improvements or alternative designs

In the context I passed first 784 tables to keep context under 100,000 tokens.

Below is the comparison of design patterns found by o1, o1-mini, and gpt-4o. You can access complete reports here. Below I have provided summary of the findings.

- o1 – https://gist.github.com/shekhargulati/7e261b3bfa23cd310a8fb7b084d49f98

- o1-mini – https://gist.github.com/shekhargulati/c78951b1224d94f2f1fc75965623549c

- gpt-4o – https://gist.github.com/shekhargulati/29fef8366a7608ee26c8f14a8596d06f

| o1 | o1-mini | gpt-4o |

|---|---|---|

| Partitioning Strategies – Range, List, Hash | Table Partitioning | Partitioning |

| Check constraints for data validation | Use of Constraints and Check Constraints | ❌ |

| Usage of bigint primary keys | Not covered | ❌ |

| JSON/JSONB for flexible data | Use of Array and JSONB Data Types | Use of JSONB for Flexible Data |

| Timestamps & History tracking | Audit and Event Logging | ❌ |

| Optimistic concurrency using lock_version | ❌ | ❌ |

| Multi-tenancy using namespace/parent references | ❌ | Inheritance and Hierarchical Design |

| Migration tables | ❌ | ❌ |

| Many to many associations | ❌ | ❌ |

| ❌ | Soft Deletion and Historical Tracking | ❌ |

| ❌ | Use of Default Values and Enumerations | ❌ |

| ❌ | Foreign Key Relationships and Referential Integrity | ❌ |

Below is the comparison of improvements found by o1, o1-mini, and gpt-4o.

| o1 | o1-mini | gpt-4o |

|---|---|---|

| Foreign-Key Constraints vs. Application-Level Enforcement | ❌ | ❌ |

| JSON Growth Management | Optimizing JSONB Usage | |

| Further Partition Pruning Config | ❌ | Enhanced Partition Management |

| Strict Enforcement of Data Types | ❌ | ❌ |

| More Comprehensive Soft-Delete Patterns | Implementing Soft Deletion Consistently | ❌ |

| ❌ | Comprehensive Use of Enumerations | ❌ |

| ❌ | Refining Access Control and Authorization | ❌ |

| ❌ | Improving Historical Data Tracking | ❌ |

Most of the improvements suggested by gpt-4o were not relevant in context of the task. It suggested use of Foreign Data Wrappers, Caching solutions, etc.

Now, let’s compare the token usage and cost.

| o1 | o1-mini | gpt-4o | |

|---|---|---|---|

| Prompt Tokens | 96894 | 99419 | 115716 |

| Completion Tokens | 2483 | 3930 | 1200 |

| Total Tokens | 99377 | 103349 | 116916 |

| Reasoning Tokens | 448 | 384 | 0 |

| Input Cost | $1.4534 | $0.2983 | $0.2893 |

| Output Cost | $0.1490 | $0.0472 | $0.0120 |

| Total Cost | $1.6024 | $0.3454 | $0.3013 |

- Looks like o1,o1-mini have different tokenizer compared to gpt-4o.

- o1-mini and gpt-4o costed almost same on this task

- o1 is roughly 5 times expensive than o1-mini

The o1model generated the best conclusion.

Taken as a whole, GitLab’s Postgres schema showcases enterprise-level design decisions optimized for scaling writes, queries, and data retention for hundreds of thousands (or more) of concurrent users. Key takeaways:

• Partition thoroughly where data grows rapidly (logs, events, ephemeral data).

• Validate data integrity with check constraints and bigint PK columns.

• Distinguish flexible fields with JSON if storing schema-less data.

• Keep track of concurrency (lock_version) and tenancy (namespace_id, etc.).

• Consider partial or no foreign keys for performance in high-scale, frequently migrating systems—but weigh the trade-offs carefully.

• Introduce consistent naming, time fields (created_at, updated_at, etc.), and possibly “deleted_at” for soft deletes.All of these patterns—and the fact that the schema is still evolving—demonstrate how scaling application requirements shape a robust, partitioned, check-constrained schema design that can handle both OLTP usage and large-scale data ingestion in a single Postgres database.

It cost me roughly $10 in OpenAI credits to run experiments for this blog.

Overall, I am happy with the results of the OpenAI o1 model. While it did not cover all the points I covered in my post, it addressed the important ones. I am not certain if this is the ideal task for the o1 model, but I believe it is, as it requires reasoning to perform this analysis. I wish the OpenAI API provided users with access to the reasoning chain of thoughts.

Discover more from Shekhar Gulati

Subscribe to get the latest posts sent to your email.