Building AI assistants using Retrieval Augmented Generation (RAG) taught me valuable lessons about user expectations and technical challenges. Here’s what I discovered along the way.

1. The Challenge of Multiple Assistants

Users often struggle when dealing with multiple AI assistants. They frequently ask the wrong assistant or expect a single assistant to handle everything. We solved this by creating a specific URL for each assistant and adding clear chat placeholders that show which assistant the user is talking to. We also implemented role-based access control (RBAC) and a central homepage to help users navigate between assistants.
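
A minimal sketch of how per-assistant URLs, placeholders, and role checks could fit together. The `Assistant` fields, the example slugs, and the role names are all hypothetical and only illustrate the idea; they are not our actual configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assistant:
    slug: str                      # hypothetical URL segment, e.g. /assistants/<slug>
    placeholder: str               # text shown in the empty chat input
    allowed_roles: frozenset[str]  # roles that may open this assistant


# Hypothetical registry; real assistants and roles will differ.
ASSISTANTS = [
    Assistant("hr", "Ask the HR assistant about leave and benefits...",
              frozenset({"employee", "hr"})),
    Assistant("sales", "Ask the sales assistant about case studies...",
              frozenset({"sales"})),
]


def visible_assistants(user_roles: set[str]) -> list[Assistant]:
    """Return the assistants a user may see on the central homepage."""
    return [a for a in ASSISTANTS if a.allowed_roles & user_roles]
```

The homepage would then list only the entries returned by `visible_assistants`, each linking to its own URL.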

2. The ChatGPT Comparison

Users naturally compare any AI assistant with ChatGPT. They expect similar features like handling thank you messages, follow-up questions, and list-based queries. We enhanced our RAG implementation (RAG++) to better match these expectations.
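
One way to handle thank-you messages is to route them away from retrieval entirely. The sketch below is a simple rule-based assumption for illustration, not a description of how RAG++ works; the `route` function, the patterns, and the six-word limit are hypothetical.

```python
import re

# Hypothetical pattern: a message that opens with a thank-you phrase.
THANKS = re.compile(r"(thanks|thank you|thx)\b")


def route(message: str) -> str:
    """Return "acknowledge" for short thank-you messages, else "retrieve"."""
    text = message.strip().lower()
    is_short = len(text.split()) <= 6
    if THANKS.match(text) and "?" not in text and is_short:
        return "acknowledge"
    return "retrieve"
```

A message routed to `"acknowledge"` can get a short friendly reply without a knowledge base lookup, which avoids an awkward "no results" answer to "Thank you!".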

3. Managing Conversations

Single-conversation interfaces create several challenges. Long conversations slow down page loading and can affect answer accuracy. Users rarely organize their chats effectively. We addressed this by:

- Implementing automatic context management

- Setting conversation history limits

- Creating automatic chat organization features
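
A conversation history limit can be sketched as keeping only the most recent messages that fit a token budget. This is an illustrative assumption: `Message`, `estimate_tokens`, and `trim_history` are hypothetical names, and the word-count estimate stands in for a real tokenizer.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str  # "user" or "assistant"
    text: str


def estimate_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())


def trim_history(history: list[Message], max_tokens: int) -> list[Message]:
    """Keep the most recent messages whose combined estimate fits max_tokens."""
    kept: list[Message] = []
    used = 0
    # Walk backwards so the newest messages are kept first.
    for message in reversed(history):
        cost = estimate_tokens(message.text)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept
```

Only the trimmed list is sent to the model, so both prompt size and page payload stay bounded as a conversation grows.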

4. Real-Time Information Access

Users want current information and often fear missing out. They still turn to search engines for real-time updates. To address this, we integrated search APIs and added an explicit search mode similar to ChatGPT’s browsing feature.

5. Setting Clear Boundaries

Users often don’t understand what RAG-based assistants can and cannot do. This leads to questions outside the assistant’s capabilities and mismatched expectations. Clear communication about limitations helps manage these expectations.

6. Handling “I Don’t Know” Answers

In RAG applications, when the assistant cannot answer a question, it usually responds with some variant of “I don’t know.” Users told us they dislike this response. We solved this by showing them something useful instead. For example, when a user asked “give me case study on unified visa platform,” we showed the following answer:

I couldn't find a specific case study on a unified Visa platform in the provided context. However, for related insights on payment systems and financial services integration, you might find the following case studies relevant:

- Case Study 1

- Case Study 2
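
The fallback above can be sketched as follows, assuming the retriever returns `(title, score)` pairs. The `fallback_answer` function, the `min_score` threshold, and the wording are hypothetical; in practice the message could also be generated by the model.

```python
from typing import Optional


def fallback_answer(
    topic: str,
    hits: list[tuple[str, float]],
    min_score: float = 0.75,  # assumed relevance threshold
    max_related: int = 2,
) -> Optional[str]:
    """Return a fallback message, or None when a hit is strong enough
    for the normal answer path."""
    if any(score >= min_score for _, score in hits):
        return None
    # Offer the closest weaker matches instead of a bare "I don't know".
    ranked = sorted(hits, key=lambda hit: hit[1], reverse=True)
    related = [title for title, _ in ranked[:max_related]]
    if not related:
        return f"I couldn't find {topic} in the provided context."
    lines = [
        f"I couldn't find {topic} in the provided context. "
        "However, you might find the following relevant:"
    ]
    lines += [f"- {title}" for title in related]
    return "\n".join(lines)
```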

7. Improving Question Quality

Many users struggle to ask effective questions. We helped by:

- Generating follow-up questions

- Implementing query rewriting

- Teaching basic prompt engineering skills
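
Query rewriting usually means asking the model to turn a context-dependent question into a standalone one before retrieval. The sketch below only builds such a prompt; the wording, the `build_rewrite_prompt` name, and the `max_turns` default are assumptions, and the call to the model itself is left out.

```python
def build_rewrite_prompt(
    history: list[tuple[str, str]],  # (role, text) pairs, oldest first
    question: str,
    max_turns: int = 4,              # assumed number of recent turns to include
) -> str:
    """Build a prompt asking the model for a standalone version of question."""
    recent = history[-max_turns:]
    transcript = "\n".join(f"{role}: {text}" for role, text in recent)
    return (
        "Rewrite the final user question so it can be understood "
        "without the conversation.\n"
        "Return only the rewritten question.\n\n"
        f"Conversation:\n{transcript}\n\n"
        f"Final question: {question}"
    )
```

With a history about payment case studies and the follow-up "any from 2023?", the rewritten question would name the topic explicitly, which gives the retriever something concrete to search for.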

8. Knowledge Base Management

Real-time document indexing in RAG applications is a common user expectation. We found it helpful to do the following for each assistant:

- Display knowledge base statistics

- Show when knowledge base indexes were last updated

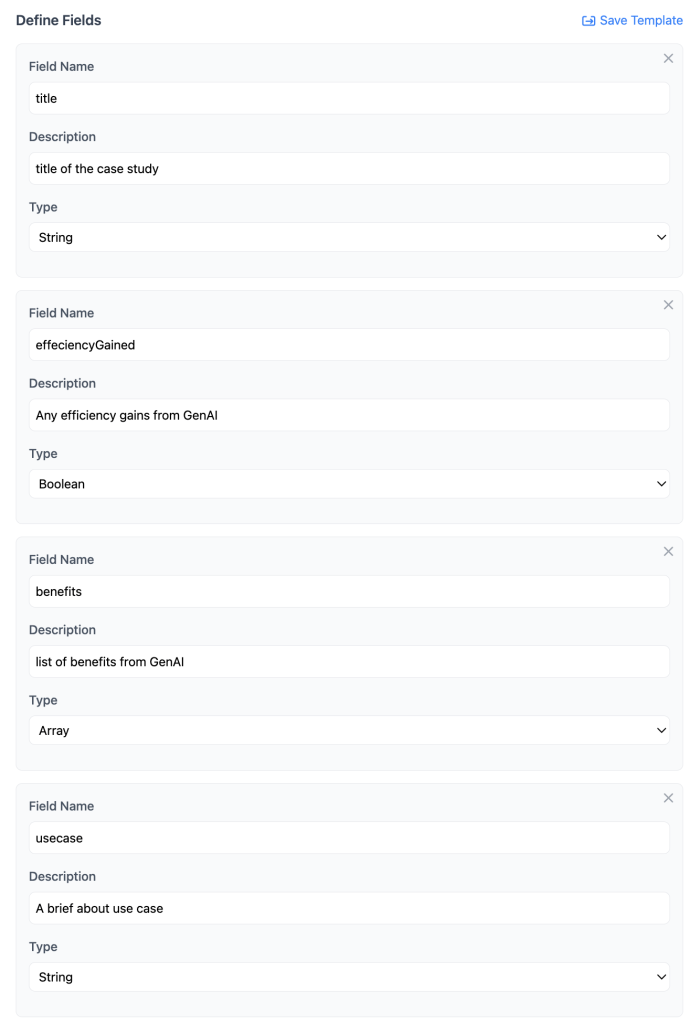

- Provide document filtering options when making search queries. We extract metadata from documents during indexing to support these filters
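
Metadata-based filtering can be sketched as an exact-match check applied to indexed chunks. The `Chunk` type, the metadata keys, and `filter_chunks` are hypothetical; most vector stores offer their own filter syntax that would replace this in practice.

```python
from dataclasses import dataclass, field


@dataclass
class Chunk:
    text: str
    # Metadata extracted at indexing time, e.g. {"doc_type": "case_study"}.
    metadata: dict[str, str] = field(default_factory=dict)


def filter_chunks(chunks: list[Chunk], filters: dict[str, str]) -> list[Chunk]:
    """Keep chunks whose metadata matches every key/value pair in filters."""
    return [
        chunk
        for chunk in chunks
        if all(chunk.metadata.get(key) == value for key, value in filters.items())
    ]
```

An empty `filters` dictionary keeps every chunk, so the same code path serves both filtered and unfiltered queries.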

9. Interface Improvements

Small UI features made a big difference. I have not seen these features in any public assistant like ChatGPT or Claude.

- Adding conversation statistics. These include the number of queries in a conversation and feedback analysis

- Showing metadata for each message, such as token count, time to first token, tokens per second, and total time to generate the answer

- Showing query timestamps

- Replaying an entire conversation

- Supporting multiple tabs and split windows

- Regenerating answers with and without conversation history

- Offering an editor mode alongside the chat mode
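
The per-message metadata in the list above (token count, time to first token, tokens per second, total time) can be collected while consuming a streamed response. This is a sketch under assumptions: `measure_stream` is a hypothetical helper, each streamed item is treated as one token, and the injectable `clock` exists only to make the function easy to test.

```python
import time
from typing import Callable, Iterable, Optional


def measure_stream(
    tokens: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> dict:
    """Consume a token stream and return timing statistics for it."""
    start = clock()
    first_token_at: Optional[float] = None
    count = 0
    for _ in tokens:
        if first_token_at is None:
            first_token_at = clock()  # moment the first token arrived
        count += 1
    total = clock() - start
    return {
        "token_count": count,
        "time_to_first_token": (
            None if first_token_at is None else first_token_at - start
        ),
        "total_time": total,
        "tokens_per_second": count / total if total > 0 else 0.0,
    }
```

In a real chat UI the loop would also forward each token to the client; it is omitted here to keep the measurement logic visible.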

These lessons continue to shape how we build and improve our RAG-based assistants. Understanding user needs and expectations helps create more effective AI tools.