I recently came across an insightful article by Drew Breunig that introduces a compelling framework for categorizing the use cases of Generative AI (Gen AI) and Large Language Models (LLMs). He classifies these applications into three distinct categories: Gods, Interns, and Cogs. Each bucket represents a different level of automation and complexity, and it’s fascinating to consider how these categories are shaping the AI landscape today.

Continue reading “Gods, Interns, and Cogs: A useful framework to categorize AI use cases”

Month: October 2024

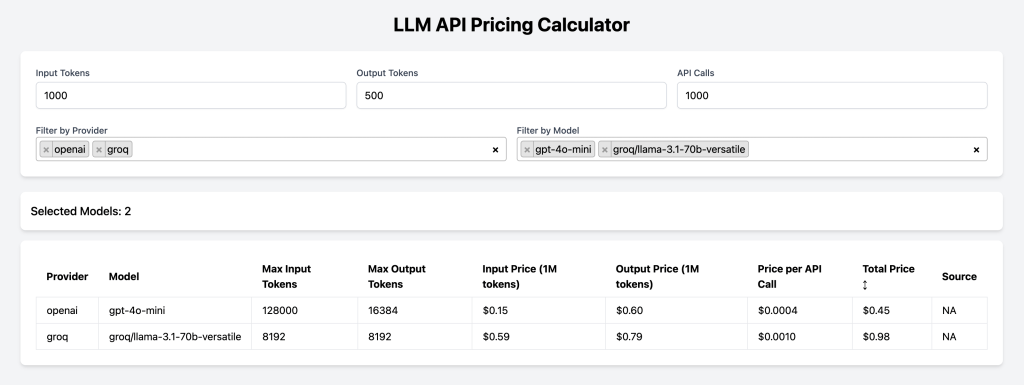

LLM API Pricing Calculator

I’ve recently begun building small HTML/JS web apps that run entirely client-side. I generate the first version of most of these tools with ChatGPT in under 30 minutes. One example is my LLM API price calculator (https://tools.o14.ai/llm-cost-calculator.html). While other LLM API pricing calculators are available, I build (or rather generate) these tools for several reasons:

- Known User Base: I have a need for the tool myself, so I already have the first user.

- Rapid Prototyping: Experimenting with the initial idea is frictionless. Building the first version typically takes only 5-8 prompts.

- Focus on Functionality: There’s no pressure to optimize the code at first. It simply needs to function.

- Iterative Development: Using the initial version reveals which additional features are needed. For instance, the first iteration of the LLM API pricing calculator only computed prices from the number of API calls and the input/output token counts. As I used it, I realized I needed to filter by provider and model, so I added that functionality.

- Privacy: Since I wrote the tool myself and it runs client-side only, I know no one is tracking me.

- Learning by Doing: This process allows me to learn about both the strengths and limitations of LLMs for code generation.
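The core arithmetic of that first version (price from the number of API calls and the input/output token counts) can be sketched as below. This is a minimal Python illustration, not the calculator’s actual JavaScript; the `ModelPrice` class and `estimate_cost` function are hypothetical names, and the sketch assumes every call uses the same number of input and output tokens.

```python
from dataclasses import dataclass


@dataclass
class ModelPrice:
    """Per-token prices in USD, named after the fields in the LiteLLM JSON."""
    input_cost_per_token: float
    output_cost_per_token: float


def estimate_cost(price: ModelPrice, api_calls: int,
                  input_tokens_per_call: int, output_tokens_per_call: int) -> float:
    """Total USD cost for a batch of API calls that all use the same token counts."""
    per_call = (input_tokens_per_call * price.input_cost_per_token
                + output_tokens_per_call * price.output_cost_per_token)
    return api_calls * per_call


# gpt-4 per-token prices as listed in the sample JSON from the prompt.
gpt4 = ModelPrice(input_cost_per_token=0.00003, output_cost_per_token=0.00006)
print(f"${estimate_cost(gpt4, 1_000, 500, 200):.2f}")  # prints $27.00
```

Here 1,000 calls with 500 input and 200 output tokens each cost 1,000 × (500 × $0.00003 + 200 × $0.00006) = 1,000 × $0.027.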

The LLM API pricing calculator shows the pricing of different LLM APIs. You can inspect its source code with your browser’s View Source feature.

Webpage: https://tools.o14.ai/llm-cost-calculator.html

The prompt that generated the first version of the app is shown below. I also provided an image showing how I wanted the UI to look.

We want to build a simple HTML Javascript based calculator as shown in the image. The table data will come from a remote JSON at location https://raw.githubusercontent.com/BerriAI/litellm/refs/heads/main/model_prices_and_context_window.json. The structure of JSON looks like as shown below. We will use tailwind css. Generate the code

{
  "gpt-4": {
    "max_tokens": 4096,
    "max_input_tokens": 8192,
    "max_output_tokens": 4096,
    "input_cost_per_token": 0.00003,
    "output_cost_per_token": 0.00006,
    "litellm_provider": "openai",
    "mode": "chat",
    "supports_function_calling": true
  },
  "gpt-4o": {
    "max_tokens": 4096,
    "max_input_tokens": 128000,
    "max_output_tokens": 4096,
    "input_cost_per_token": 0.000005,
    "output_cost_per_token": 0.000015,
    "litellm_provider": "openai",
    "mode": "chat",
    "supports_function_calling": true,
    "supports_parallel_function_calling": true,
    "supports_vision": true
  },
}
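Filtering by provider and model boils down to flattening this JSON map into table rows. The Python sketch below relies only on the field names visible in the sample (`input_cost_per_token`, `output_cost_per_token`, `litellm_provider`); the function names are hypothetical, and the real tool does this in browser JavaScript. It skips any entry without numeric per-token prices, as a defensive assumption about the full file.

```python
import json
import urllib.request
from typing import Optional

# URL taken from the prompt; fetching it requires network access.
PRICES_URL = ("https://raw.githubusercontent.com/BerriAI/litellm/refs/heads/main/"
              "model_prices_and_context_window.json")


def fetch_prices(url: str = PRICES_URL) -> dict:
    """Download the LiteLLM model price map and parse it as JSON."""
    with urllib.request.urlopen(url) as resp:
        return json.load(resp)


def to_rows(prices: dict, provider: Optional[str] = None,
            model_query: Optional[str] = None) -> list:
    """Flatten the price map into rows, optionally filtered by provider and model name."""
    rows = []
    for model, spec in prices.items():
        # Skip anything that is not a model entry with numeric per-token prices.
        if not isinstance(spec, dict):
            continue
        inp = spec.get("input_cost_per_token")
        out = spec.get("output_cost_per_token")
        if not isinstance(inp, (int, float)) or not isinstance(out, (int, float)):
            continue
        if provider is not None and spec.get("litellm_provider") != provider:
            continue
        # Case-insensitive substring match on the model name.
        if model_query is not None and model_query.lower() not in model.lower():
            continue
        rows.append({
            "model": model,
            "provider": spec.get("litellm_provider"),
            # Prices per million tokens are easier to compare than per-token prices.
            "input_per_1m": inp * 1_000_000,
            "output_per_1m": out * 1_000_000,
        })
    # Cheapest input price first.
    return sorted(rows, key=lambda r: r["input_per_1m"])
```

For example, `to_rows(fetch_prices(), provider="openai")` would list OpenAI models ordered by input price. Keeping `to_rows` separate from the network call makes the filtering logic easy to test against a small hand-written dictionary.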

This API pricing calculator is based on pricing information maintained by the LiteLLM project. You can see all the prices at https://github.com/BerriAI/litellm/blob/main/model_prices_and_context_window.json. In my experience, this pricing information is not always up to date, so you should always confirm the latest price on the provider’s pricing page.

Monkey patching autoevals to show token usage

I use the autoevals library to write evals that assess the output of LLMs. If you have never written an eval before, let me explain with a simple example. Let’s assume that you are building a quote generator where you ask an LLM to generate inspirational Steve Jobs quotes for software engineers.
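In rough terms, an eval runs the model on a set of inputs and scores each output. The plain-Python sketch below shows that shape for the quote generator; it does not use the autoevals API, and the scoring rules, `quote_scorer`, `run_eval`, and the `fake_llm` stand-in are all hypothetical. Libraries such as autoevals provide ready-made scorers in place of hand-written rules like these, including scorers that use an LLM as the judge.

```python
from typing import Callable


def quote_scorer(output: str) -> float:
    """Hypothetical rule-based scorer: returns the fraction of checks that pass."""
    text = output.lower()
    checks = [
        len(output.strip()) > 0,                # something was generated
        len(output.split()) <= 40,              # short enough to read as a quote
        "software" in text or "code" in text,   # on topic for software engineers
    ]
    return sum(checks) / len(checks)


def run_eval(generate: Callable[[str], str], prompts: list,
             scorer: Callable[[str], float]) -> float:
    """Generate an output for each prompt, score it, and return the mean score."""
    scores = [scorer(generate(p)) for p in prompts]
    return sum(scores) / len(scores)


def fake_llm(prompt: str) -> str:
    """Stand-in for a real LLM call so the sketch runs offline."""
    return "Great software is built by people who care about every line of code."


print(run_eval(fake_llm, ["Give me an inspirational quote for software engineers."],
               quote_scorer))  # prints 1.0
```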

Continue reading “Monkey patching autoevals to show token usage”