I enjoy reading books on the O’Reilly learning platform (https://learning.oreilly.com/). For the past month, a new feature on the platform called “Answers” has been staring me down, and I haven’t been tempted to click it. Maybe it’s LLM fatigue or something else, but I just hadn’t given it a try. I do use LLM tools daily, but most of them are tools I have designed for myself around my own workflows.

Today, I decided to give it a try. If you go to a book page, like the one for the book I am currently reading (https://learning.oreilly.com/library/view/hands-on-large-language/9781098150952/), you will see the Answers icon in the right sidebar.

When you click on Answers, it shows a standard chat input box and some suggested questions. We have all seen these a million times by now.

It looks like a standard Retrieval-Augmented Generation (RAG) use case. When you ask a question, it searches its knowledge base (presumably with some sort of vector or hybrid search) and then generates an answer from the results.
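To make the retrieve-then-generate flow concrete, here is a minimal sketch of a naive RAG loop. I don't know how Answers is actually built, so everything here is an assumption: the chunks are toy text, the bag-of-words cosine score stands in for real vector or hybrid search, and the prompt would be sent to an LLM in a real system.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Step 1: rank chunks by similarity to the query and keep the top k."""
    q = Counter(tokenize(query))
    ranked = sorted(
        chunks, key=lambda c: cosine(q, Counter(tokenize(c))), reverse=True
    )
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Step 2: put the retrieved chunks into the prompt for the generator."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


# Toy knowledge base (made up for illustration, not taken from any book).
CHUNKS = [
    "Query rewriting turns a verbose user question into a better search query.",
    "Reranking reorders retrieved chunks by relevance to the query.",
    "The tokenizer splits text into tokens before the model sees it.",
]

top = retrieve("what is query rewriting", CHUNKS, k=1)
prompt = build_prompt("what is query rewriting", top)
print(prompt)
```

Every step in this loop (chunking, retrieval, prompt construction, generation) is a place where a naive system can go wrong, which is where most of the production effort ends up.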

Having built production-ready RAG systems, I know the complexity involved in each of the RAG steps. It takes a lot of effort to go from a naive RAG system to a production-ready one. I have written multiple posts covering these issues. I am also building a course that covers, step by step, how to build a production-ready RAG application. You can register now and get a 50% discount using this form: https://forms.gle/twuVNs9SeHzMt8q68

So today I finally gave the Answers feature a try, and I am disappointed with it. It looks like a poor implementation of RAG.

Let me share what I did. I am reading the book Hands-On Large Language Models.

I was reading Chapter 8, Semantic Search and RAG. The last section of the chapter covers Advanced RAG Techniques. Since I was already reading about the topic, I expected the answer to focus primarily on the techniques mentioned in the chapter. I asked:

what are advanced RAG techniques

I expected the answer to come from the book I am reading. It could add extra information from other books, but since the application already knows which book and chapter I have open, it should have listed the techniques mentioned in the chapter first. The answer I got from the Answers feature is shown below.

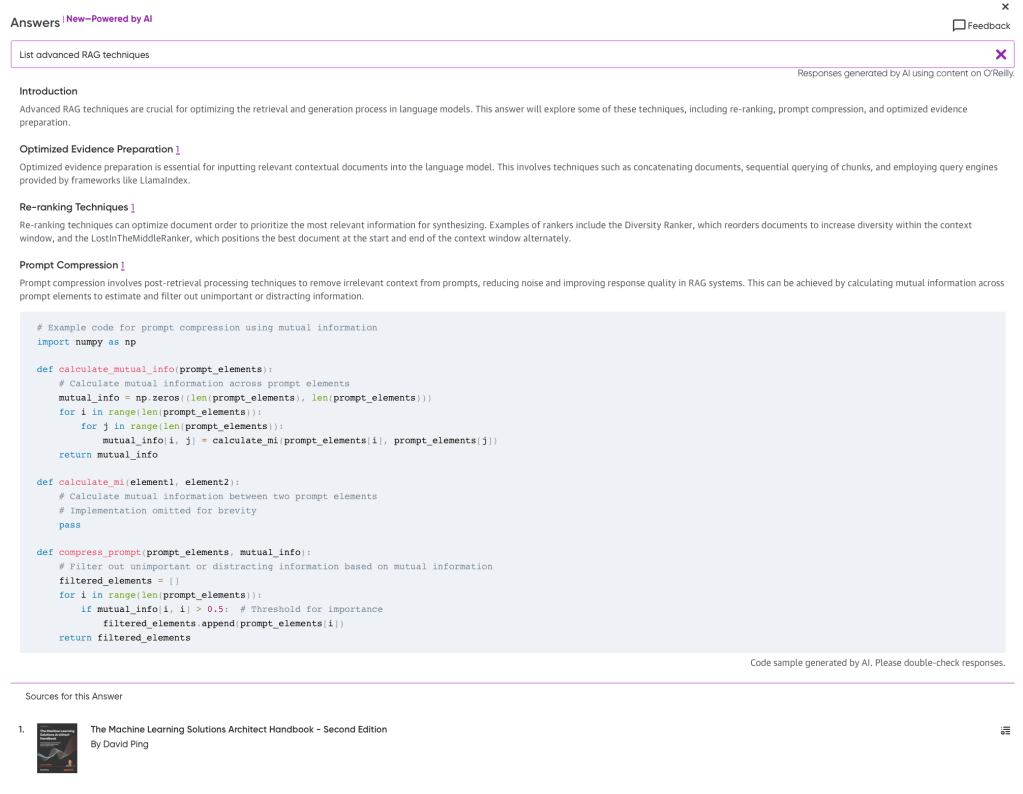

I thought it might help to change my query to “List advanced RAG techniques”.

The answer was better than the first one, but again it did not list the advanced RAG techniques covered in Chapter 8 of the book I am reading.

I asked another question, “list the different memory types in LLM applications”, and again it gave an incomplete answer drawn from another book, [Building Generative AI-Powered Apps: A Hands-on Guide for Developers](https://learning.oreilly.com/library/view/-/9798868802058).

Next, I asked the questions listed below, and for every one of them I got the answer “O'Reilly Answers doesn't cover that. What O'Reilly topics would you like to learn more about?”

- Summarize the content of the chapter I am reading

- which book should I read after this book

- who is the author of the book

- list similar books to the current

After playing with the Answers feature for 30 minutes, I see the following issues:

Issue 1: The user has no visibility into the context used to generate the answer. As I said, as a user of this feature I expected the book I am currently reading to be used as the context source, but as I showed above, that is not the case.

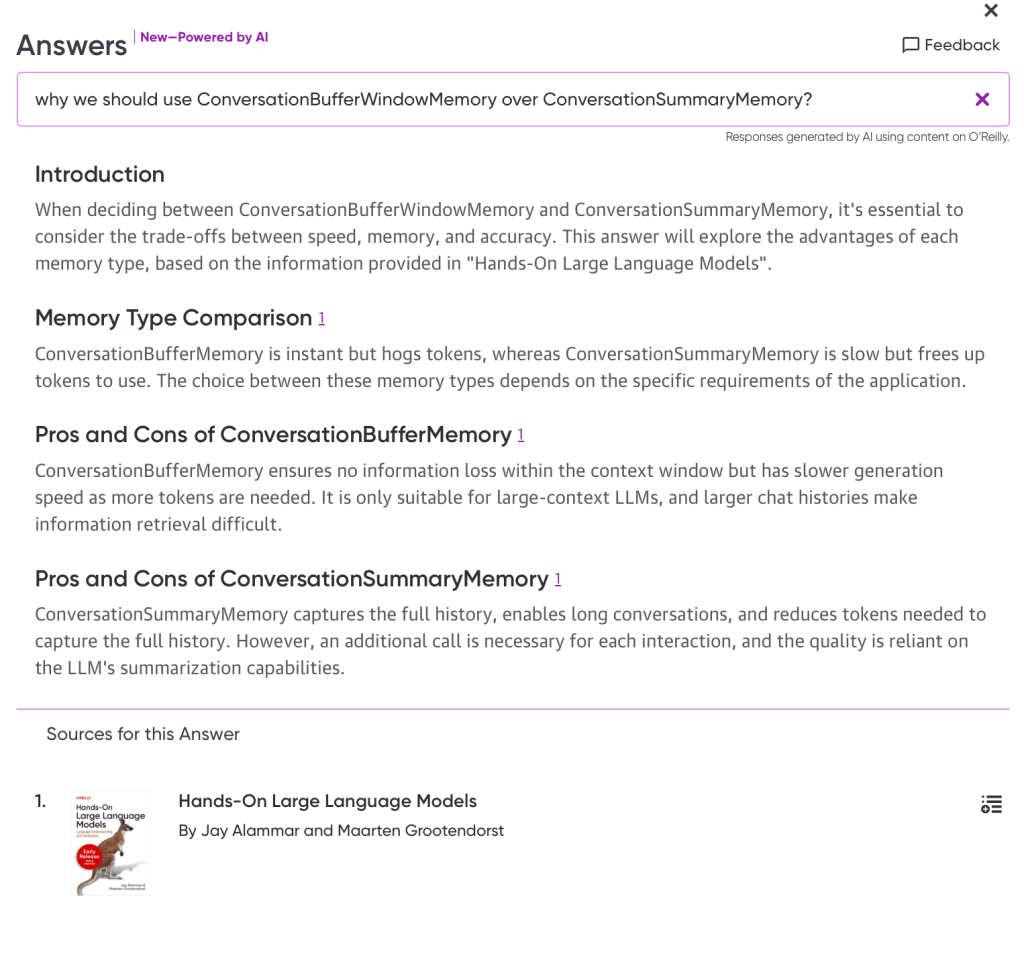

Issue 2: Inaccurate answers. It compared ConversationBufferMemory and ConversationSummaryMemory, whereas I had asked about ConversationBufferWindowMemory and ConversationSummaryMemory.

Issue 3: They don’t clearly state the limitations of the system. Summarization is one of the most basic tasks a reader would want to perform, yet my request to summarize the chapter was turned down.

Issue 4: I don’t think they are doing query rewriting, which is essential for most RAG applications.
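As a rough illustration of what query rewriting could look like here, the sketch below resolves vague references such as “the book” or “the chapter I am reading” into the titles the application already knows. This is a rule-based stand-in for what would usually be an LLM call, and the function name, patterns, and parameters are all my own assumptions.

```python
import re

# Hypothetical patterns for references to whatever the reader has open.
BOOK_REF = re.compile(r"\b(?:this|the|the current) book\b", re.IGNORECASE)
CHAPTER_REF = re.compile(
    r"\b(?:this|the) chapter(?: I am reading)?\b", re.IGNORECASE
)


def rewrite_query(query: str, book_title: str, chapter_title: str) -> str:
    """Replace vague references with the known book and chapter titles."""
    # Lambdas avoid backslash/group interpretation in the replacement text.
    rewritten = BOOK_REF.sub(lambda _: f'the book "{book_title}"', query)
    rewritten = CHAPTER_REF.sub(
        lambda _: f'the chapter "{chapter_title}"', rewritten
    )
    return rewritten


BOOK = "Hands-On Large Language Models"
CHAPTER = "Semantic Search and RAG"

for q in (
    "who is the author of the book",
    "Summarize the content of the chapter I am reading",
    "what are advanced RAG techniques",
):
    print(rewrite_query(q, BOOK, CHAPTER))
```

Queries that already stand on their own, like the last one, pass through unchanged; a real rewriter would likely also expand or clean those up before retrieval.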

Issue 5: I don’t think they are doing metadata-based filtering.

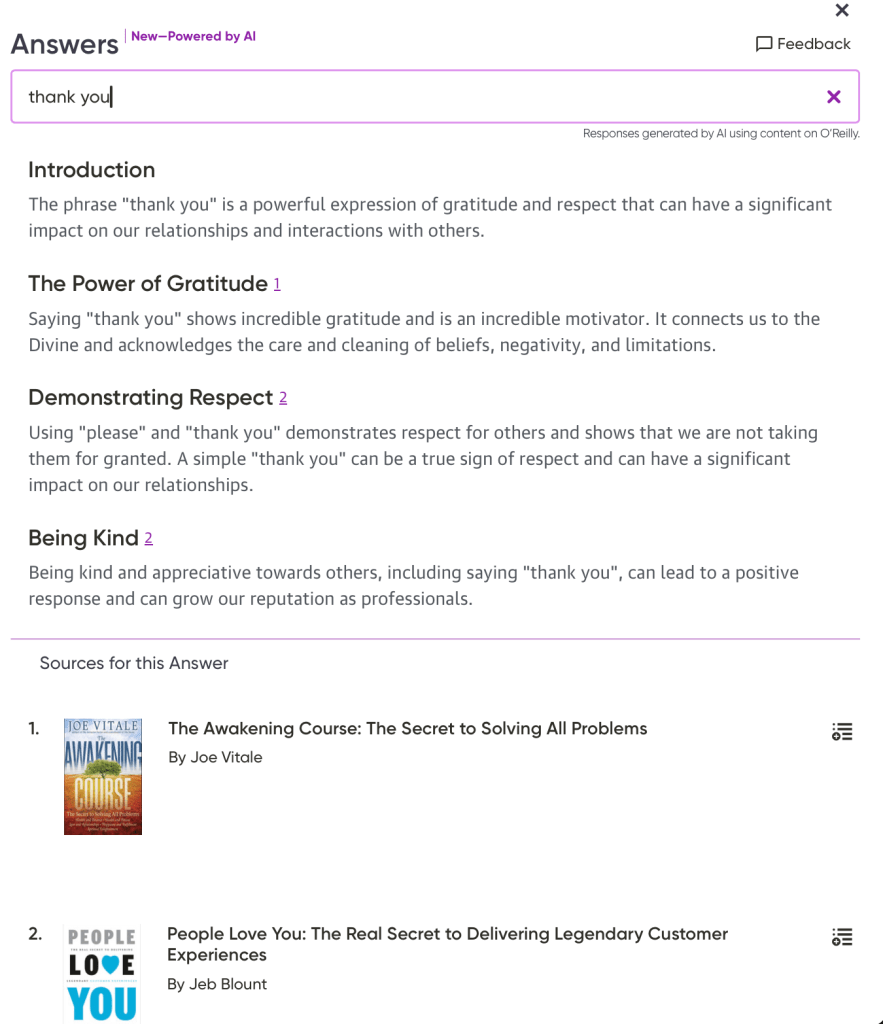

Issue 6: It does not handle messages like ‘thank you’. The system currently runs such messages through RAG and attempts to generate an answer.
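A cheap guard in front of the RAG pipeline would cover this. The sketch below is one possible approach, not a description of how Answers works: the phrase list, the canned reply, and the `rag_answer` callable are hypothetical, and a production system might use an intent classifier instead of exact matching.

```python
import re
from typing import Callable

# Hypothetical list of acknowledgements that should never reach retrieval.
ACKNOWLEDGEMENTS = {
    "thanks",
    "thank you",
    "thanks a lot",
    "thank you very much",
}


def normalize(message: str) -> str:
    """Lowercase, drop punctuation, and collapse repeated whitespace."""
    letters_only = re.sub(r"[^a-z ]+", "", message.lower())
    return " ".join(letters_only.split())


def respond(message: str, rag_answer: Callable[[str], str]) -> str:
    """Answer acknowledgements directly; send everything else to RAG."""
    if normalize(message) in ACKNOWLEDGEMENTS:
        return "You're welcome! What would you like to learn about next?"
    return rag_answer(message)


# Stand-in for the real pipeline so the example runs on its own.
def fake_rag(question: str) -> str:
    return f"[RAG answer for: {question}]"


print(respond("Thank you!", fake_rag))
print(respond("what are advanced RAG techniques", fake_rag))
```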

Issue 7: They should offer users the option to limit the search to specific documents. A simple toggle button could do this, where the ‘off’ state restricts the search to the current book and the ‘on’ state searches the entire index.
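The toggle is essentially a metadata filter applied before ranking. Here is a minimal sketch under my own assumptions: each indexed chunk carries a `book_id` (I reuse the two book IDs from the URLs above), the chunk text is placeholder content, and `search_all_books` is a hypothetical flag mirroring the toggle state.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    book_id: str  # metadata stored alongside the text at indexing time
    text: str


def candidate_chunks(
    index: list[Chunk], current_book_id: str, search_all_books: bool
) -> list[Chunk]:
    """Return the chunks the retriever is allowed to rank.

    Toggle off (search_all_books=False): only the current book.
    Toggle on (search_all_books=True): the entire index.
    """
    if search_all_books:
        return list(index)
    return [c for c in index if c.book_id == current_book_id]


CURRENT_BOOK = "9781098150952"  # Hands-On Large Language Models
OTHER_BOOK = "9798868802058"  # Building Generative AI-Powered Apps

# Placeholder chunk text, made up for illustration.
INDEX = [
    Chunk(CURRENT_BOOK, "placeholder text from the book being read"),
    Chunk(OTHER_BOOK, "placeholder text from another book"),
]

scoped = candidate_chunks(INDEX, CURRENT_BOOK, search_all_books=False)
everything = candidate_chunks(INDEX, CURRENT_BOOK, search_all_books=True)
print(len(scoped), len(everything))
```

A softer variant would keep the whole index but boost chunks from the current book, so that answers lead with the book being read and add other sources afterwards.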

Issue 8: It does not support multi-turn conversations. This is something all users expect from day one. Right now it is a single-turn generative search experience.

While my exploration of O’Reilly Answers revealed room for improvement, I remain optimistic about its potential. My main use cases for LLMs are learning, research, and summarization, so I think this feature can add value. The ability to seamlessly access relevant information from the book you’re reading, along with supplementary resources, would be a game-changer for knowledge seekers.
