I have been building and operating a ChatGPT-like enterprise AI assistant for a year. We log all user queries in a database for future analysis and for building personalized features. Usage has grown over time, and it is becoming difficult for our small team of four to rely on manual quality analysis methods like eyeballing and vibe checks to understand system accuracy and usage patterns.

In this quick post I will cover how we can use BERTopic and OpenAI's gpt-4o-mini model to cluster user queries into labelled groups. We will run this analysis on the Chatbot Arena dataset.

This dataset contains 33K cleaned conversations with pairwise human preferences. It is collected from 13K unique IP addresses on the Chatbot Arena from April to June 2023. Each sample includes a question ID, two model names, their full conversation text in OpenAI API JSON format, the user vote, the anonymized user ID, the detected language tag, the OpenAI moderation API tag, the additional toxic tag, and the timestamp.

BERTopic is an open-source project that offers a novel approach to topic modelling. Topic modelling is an unsupervised, exploratory approach to making sense of a collection of documents.

BERTopic combines embeddings from BERT-based language models with c-TF-IDF, a variation of the traditional TF-IDF (Term Frequency-Inverse Document Frequency) algorithm designed to work with multiple classes or clusters of documents, to help uncover hidden thematic structures within your text data. This approach assumes that documents grouped by semantic similarity can effectively represent a topic, where each cluster reflects a major theme and the combined clusters paint a broader picture.
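To make the c-TF-IDF idea concrete, here is a minimal pure-Python sketch. It treats each cluster as one long document and weights a term by its frequency in the cluster times log(1 + A / f), where A is the average number of words per cluster and f is the term's frequency across all clusters. The function name and the toy clusters are made up for illustration, and the sketch uses raw counts, whereas BERTopic's own implementation also normalizes the term frequencies.

```python
import math
from collections import Counter


def c_tf_idf(clusters: dict[str, list[str]]) -> dict[str, dict[str, float]]:
    """Score terms per cluster as tf(t, c) * log(1 + A / f(t))."""
    # Treat every cluster as one long document and count its words.
    term_counts = {
        name: Counter(" ".join(docs).lower().split())
        for name, docs in clusters.items()
    }
    # f(t): frequency of each term across all clusters.
    total_counts = Counter()
    for counts in term_counts.values():
        total_counts.update(counts)
    # A: average number of words per cluster.
    avg_words = sum(total_counts.values()) / len(term_counts)
    return {
        name: {
            term: tf * math.log(1 + avg_words / total_counts[term])
            for term, tf in counts.items()
        }
        for name, counts in term_counts.items()
    }


# Toy clusters, invented for this example.
clusters = {
    "gpu": ["cuda kernel on the gpu", "opencl kernel on the gpu"],
    "camera": ["nikon lens on the camera", "fuji lens on the camera"],
}
scores = c_tf_idf(clusters)
top_gpu_terms = sorted(scores["gpu"], key=scores["gpu"].get, reverse=True)[:2]
print(top_gpu_terms)
```

Words such as "on" and "the" appear in both clusters, so they are weighted down, while words that are frequent in only one cluster rise to the top.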

To install BERTopic, run the following pip command:

pip install bertopic

We will use OpenAI to come up with better textual names for the topic labels, so install its client as well:

pip install openai

The BERTopic topic modelling pipeline consists of two steps: clustering and topic representation.

Clustering: SBERT -> UMAP -> HDBSCAN

Topic Representation: CountVectorizer -> c-TF-IDF

In the clustering step of the pipeline:

- First, documents are embedded using a text embedding model. The default is the SentenceTransformer model all-MiniLM-L6-v2.

- Next, Uniform Manifold Approximation and Projection (UMAP) is used for dimensionality reduction. Reducing the number of dimensions lowers the computational cost and makes clustering more effective, since distances become less informative in high-dimensional embedding spaces.

- Finally, HDBSCAN is used to uncover clusters in the dataset based on the density distribution of the data points.
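The steps above map onto components that can be passed to BERTopic explicitly. The sketch below spells them out. The hyperparameter values mirror the defaults described in the BERTopic documentation as far as I know, but they can change between versions, so treat them as assumptions. The function name build_topic_model is made up for this example.

```python
# Assumed values: they mirror the documented BERTopic defaults,
# but may differ between library versions.
UMAP_PARAMS = {
    "n_neighbors": 15,
    "n_components": 5,
    "min_dist": 0.0,
    "metric": "cosine",
}
HDBSCAN_PARAMS = {
    "min_cluster_size": 10,
    "metric": "euclidean",
    "cluster_selection_method": "eom",
    "prediction_data": True,
}


def build_topic_model():
    """Build a BERTopic model with every pipeline step spelled out."""
    # Imported lazily because these libraries are heavy to load.
    from bertopic import BERTopic
    from bertopic.vectorizers import ClassTfidfTransformer
    from hdbscan import HDBSCAN
    from sentence_transformers import SentenceTransformer
    from sklearn.feature_extraction.text import CountVectorizer
    from umap import UMAP

    return BERTopic(
        # Clustering: embed, reduce dimensions, then cluster by density.
        embedding_model=SentenceTransformer("all-MiniLM-L6-v2"),
        umap_model=UMAP(**UMAP_PARAMS),
        hdbscan_model=HDBSCAN(**HDBSCAN_PARAMS),
        # Topic representation: bag of words per cluster, then c-TF-IDF.
        vectorizer_model=CountVectorizer(),
        ctfidf_model=ClassTfidfTransformer(),
    )
```

Swapping a single component, for example a different embedding model or a larger min_cluster_size, leaves the rest of the pipeline unchanged.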

Let’s now look at how we can run topic modelling on the Chatbot Arena dataset.

We will start by loading the dataset using the Hugging Face datasets library.

from datasets import load_dataset

dataset = load_dataset("lmsys/chatbot_arena_conversations")

We will filter out non-English conversations and extract only the first user question from each row.

training_dataset = dataset['train']

english_dataset = list(filter(lambda x: x['language'] == 'English', training_dataset))

questions = list(map(lambda c: c['conversation_a'][0]['content'], english_dataset))

You can look at the first five questions

questions[:5]

['What is the difference between OpenCL and CUDA?',

'Why did my parent not invite me to their wedding?',

'Fuji vs. Nikon, which is better?',

'How to build an arena for chatbots?',

'When is it today?']

After filtering, we are left with 29,206 questions.
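The filter and map steps above can also be written as a single list comprehension. Here is a small sketch that runs on two hand-made rows, which only mimic the two fields used above (language and conversation_a). The helper name is made up, and the extra check for an empty conversation is my own precaution and not something the dataset is known to need.

```python
def first_english_questions(rows):
    """Return the opening user message of every English conversation."""
    return [
        row["conversation_a"][0]["content"]
        for row in rows
        # Skip non-English rows and (defensively) empty conversations.
        if row["language"] == "English" and row["conversation_a"]
    ]


# Two hand-made rows that only mimic the fields used in this post.
sample_rows = [
    {
        "language": "English",
        "conversation_a": [
            {"role": "user", "content": "What is AI?"},
            {"role": "assistant", "content": "..."},
        ],
    },
    {
        "language": "German",
        "conversation_a": [{"role": "user", "content": "Was ist KI?"}],
    },
]
print(first_english_questions(sample_rows))
```

On the real data the call would be first_english_questions(dataset['train']).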

Now, we will use BERTopic and OpenAI API to cluster topics.

from bertopic import BERTopic

from bertopic.representation import OpenAI

from openai import OpenAI as OpenAIClient

from hdbscan import HDBSCAN

client = OpenAIClient()

hdbscan_model = HDBSCAN(min_cluster_size=10, metric='euclidean', cluster_selection_method='eom', prediction_data=True)

# OpenAI Representation Model

representation_model = OpenAI(client=client, model="gpt-4o-mini", chat=True)

# Use the representation model in BERTopic on top of the default pipeline

topic_model = BERTopic(representation_model=representation_model, hdbscan_model=hdbscan_model)

topics, probs = topic_model.fit_transform(questions)

topic_model is the model that we have trained; it contains information about the model and the topics that were created. topics holds the topic assigned to each question. probs holds the probability of the assigned topic for each question.

The above code trains the model. It takes close to 10 minutes to execute. You can also save the model and load it later.
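Saving could look like the sketch below. It assumes a BERTopic version that supports safetensors serialization, and the helper name and the default embedding model pointer are choices made for this example. Passing the model name as a string stores a reference to the embedding model instead of the full weights.

```python
def save_topic_model(
    topic_model,
    path,
    embedding_model="sentence-transformers/all-MiniLM-L6-v2",
):
    """Persist a fitted BERTopic model to a directory."""
    topic_model.save(
        path,
        serialization="safetensors",           # lightweight, avoids pickle
        save_ctfidf=True,                      # keep the c-TF-IDF matrix
        save_embedding_model=embedding_model,  # store only a pointer to the model
    )
    return path
```

For example, save_topic_model(topic_model, "arena_topics") writes the model into a directory with that name, which can then be passed to BERTopic.load.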

from bertopic import BERTopic

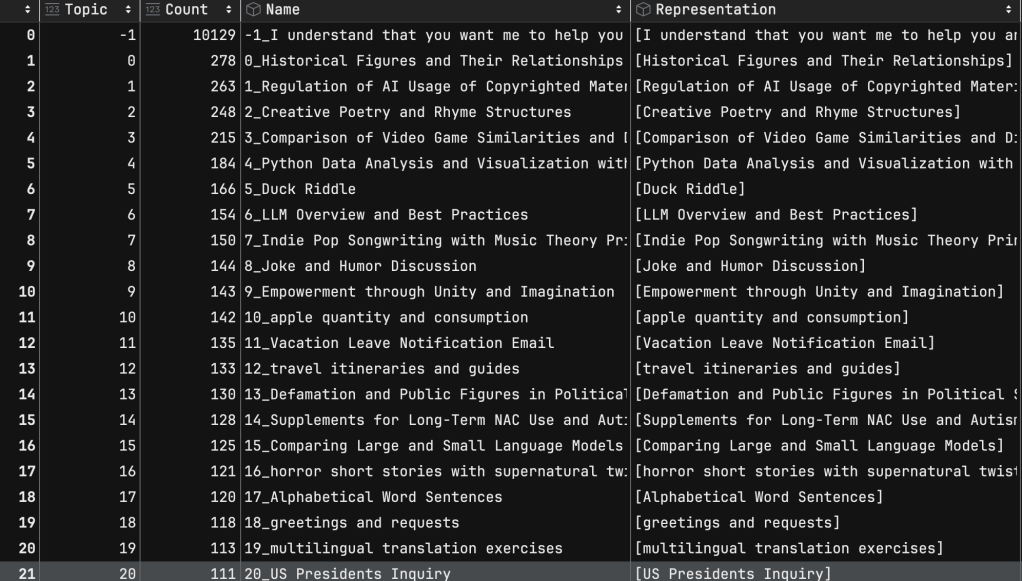

topic_model = BERTopic.load("<<name of the model>>")You can look at all the topic information using the topic_model.get_topic_info(). This gives you a dataframe with topic details. Below are the first twenty topic clusters.

Each topic has an integer id and a Name. For example, topic 0 has the name 0_Historical Figures and Their Relationships, and 278 questions were assigned to it. I was not expecting 166 instances of Duck Riddle.
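The per-topic counts can also be checked directly from the topics list returned by fit_transform, without going through the dataframe. A minimal sketch, with a made-up helper name and made-up topic assignments:

```python
from collections import Counter


def topic_sizes(topics):
    """Count how many documents were assigned to each topic id, largest first."""
    return Counter(topics).most_common()


example_topics = [0, 0, 1, -1, 0, 2, -1]  # made-up assignments
print(topic_sizes(example_topics))
```

Each entry is a (topic id, count) pair, so the first entry is the largest group.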

The topic with id -1 contains the outliers: all the questions that couldn’t be clustered. I was not expecting close to one third of the questions to be outliers. As mentioned in the docs, this is expected with the HDBSCAN algorithm. You can reduce outliers and reassign them to existing topics using the topic_model.reduce_outliers() method. Please refer to the docs for more information.
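A rough sketch of how outlier reduction could be wired up is shown below. The helper name is made up, and the "c-tf-idf" strategy is just one of the strategies the docs describe, picked here as an assumption. It is worth comparing a few strategies on your own data.

```python
def reassign_outliers(topic_model, docs, topics, strategy="c-tf-idf"):
    """Move outlier documents (topic -1) into their closest existing topic."""
    before = sum(1 for t in topics if t == -1) / len(topics)
    new_topics = topic_model.reduce_outliers(docs, topics, strategy=strategy)
    after = sum(1 for t in new_topics if t == -1) / len(new_topics)
    # Report how much of the data was an outlier before and after.
    print(f"outlier share: {before:.1%} -> {after:.1%}")
    return new_topics
```

It would be called as new_topics = reassign_outliers(topic_model, questions, topics). Calling topic_model.update_topics(questions, topics=new_topics) afterwards refreshes the topic representations. As far as I understand, the LLM-generated labels are only kept if the representation model is passed to update_topics again, which means another round of API calls.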

In the screenshot above I only showed the first twenty topics. I have created a gist that has all the 631 topics created by BERTopic. You can refer to gist here.

It cost me around 10 cents in OpenAI gpt-4o-mini usage.
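For a rough feel of where such a number comes from, here is a back-of-the-envelope estimate, assuming one labelling request per topic. Only the topic count comes from this post. The token counts are round numbers picked for illustration and not measured, and the prices are assumed list prices that should be checked against the current price list.

```python
def estimate_labeling_cost(
    n_topics,
    input_tokens_per_topic,
    output_tokens_per_topic,
    usd_per_million_input,
    usd_per_million_output,
):
    """Estimate the cost in US dollars of one labelling request per topic."""
    input_cost = n_topics * input_tokens_per_topic * usd_per_million_input / 1_000_000
    output_cost = n_topics * output_tokens_per_topic * usd_per_million_output / 1_000_000
    return input_cost + output_cost


cost = estimate_labeling_cost(
    n_topics=631,                 # topics reported in this post
    input_tokens_per_topic=1000,  # assumption, not measured
    output_tokens_per_topic=20,   # assumption: a short label
    usd_per_million_input=0.15,   # assumed price, check the current price list
    usd_per_million_output=0.60,  # assumed price, check the current price list
)
print(f"~${cost:.2f}")
```

Under these assumptions almost all of the cost comes from the prompt side, because each request includes representative documents and keywords while the response is only a short label.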

Grouping tens of thousands of queries manually is a lot of work. BERTopic is a quick way to understand what kind of topics people are exploring with your AI assistant. You can then focus on specific topics and improve them in a systematic manner.

If you want to look at the representative documents for a topic, you can use topic_model.get_representative_docs(1). For topic 1, Regulation of AI Usage of Copyrighted Materials, we can see the following three questions.

['write a law as clear and simple as possible to regulate the usage of copyrights by AI companies\n\nTitle: Artificial Intelligence and Copyright Usage Act\n\nSection 1: Purpose and Intent\n\nThis Act aims to regulate the usage of copyrighted materials by artificial intelligence (AI) companies, ensuring protection of intellectual property rights, promoting innovation, and providing clarity on liability and ownership of AI-generated content.\n\nSection 2: Definitions\n\n(a) "AI Company" refers to any organization or entity engaged in the development, distribution, or commercialization of artificial intelligence systems, software, or services.\n\n(b) "Copyrighted Material" refers to any original work of authorship that is protected under copyright law.\n\n(c) "AI System" refers to any machine, software, or algorithm capable of processing data or learning from data to generate content, make decisions, or perform tasks without explicit human intervention.\n\n(d) "AI-Generated Content" refers to any work or output created or produced by an AI System.\n\nSection 3: Usage of Copyrighted Material\n\n(a) AI Companies shall obtain proper licenses or permissions for the use of Copyrighted Material in the development, training, or operation of AI Systems.\n\n(b) AI Companies shall ensure that the usage of Copyrighted Material falls within the scope of the licenses or permissions obtained and complies with applicable copyright laws and regulations.\n\nSection 4: Ownership and Liability of AI-Generated Content\n\n(a) AI-Generated Content shall be consi',

'What is ai ?',

'what is AI?']

If you want to look at all the documents in a topic, you will have to write custom code.

# Start with an empty list per topic id, then append each question to its topic
topic_questions = {topic: [] for topic in set(topics)}

for topic, question in zip(topics, questions):
    topic_questions[topic].append(question)

Now, you can query the topic_questions dictionary.

topic_questions[6][0:10]

['What is the best way to extract an ontology from a LLM?',

'Explain the uses of DMT',

'What are the most important xgboost hyperparameters?',

'I have a meeting in 6 minute but instead I am playing with LLM. Halp !',

'why llms always have a name related to lama?',

'introduce the auto_LLM',

'how to teach LLM using tools?',

'Im testing you against another LLM, why are you better?',

'please provide a detailed step by step guide on how to fine-tune an existing llm model on an individual person including biography, manner of speech and further individual properties?',

'please provide a detailed step by step guide on how to fine-tune an existing llm model on an individual person including biography, manner of speech and further individual properties?']
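The last two entries above are identical, most likely because the same prompt was submitted more than once. If the repeats get in the way while reading through a topic, a small variant of the grouping code can drop exact duplicates while keeping the original order. This is only a sketch with a made-up helper name, and it catches exact string matches only.

```python
from collections import defaultdict


def group_unique_questions(topics, questions):
    """Group questions by topic id, keeping the first copy of each exact duplicate."""
    grouped = defaultdict(dict)
    for topic, question in zip(topics, questions):
        # Dict keys keep insertion order, so repeats are dropped without reordering.
        grouped[topic].setdefault(question, None)
    return {topic: list(unique) for topic, unique in grouped.items()}


example = group_unique_questions(
    [6, 6, 6, 2],
    [
        "how to teach LLM using tools?",
        "introduce the auto_LLM",
        "how to teach LLM using tools?",
        "Fuji vs. Nikon, which is better?",
    ],
)
print(example[6])
```

Note that the topic sizes reported by BERTopic still count every copy, so keep the original lists around when you need volumes.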

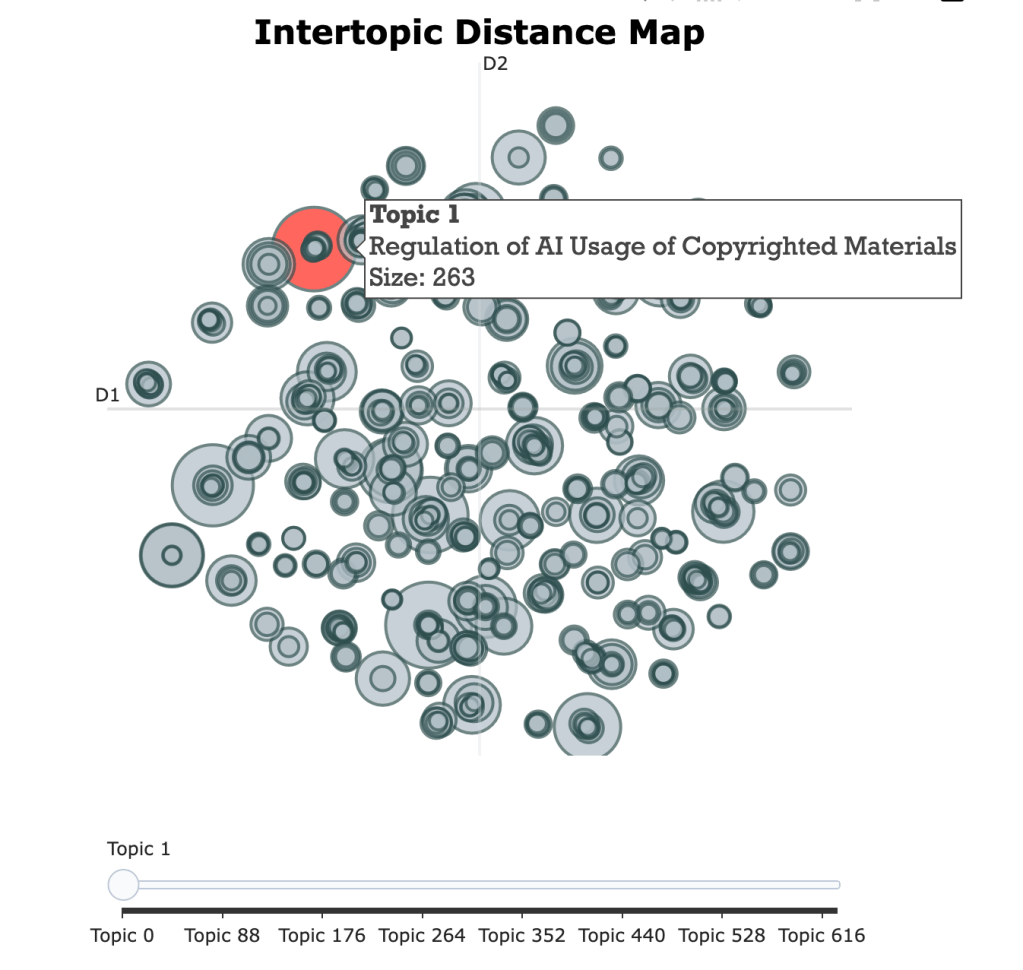

You can also visualize the topics for better analysis.

topic_model.visualize_topics()
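In a notebook the figure renders inline. Outside a notebook, one option is to write it to a standalone HTML file. This sketch relies on visualize_topics returning a Plotly figure, and the helper name and file name are made up.

```python
def export_topic_map(topic_model, path="topics.html"):
    """Write the interactive intertopic distance map to an HTML file."""
    fig = topic_model.visualize_topics()  # returns a Plotly figure
    fig.write_html(path)
    return path
```

The resulting file can be opened in any browser or shared with teammates who do not have the model loaded.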

We can also find topics that are similar to a search term. For example, if I want to find topics that discuss “Sports”, I can use the find_topics method.

similar_topics, similarity = topic_model.find_topics("Sports", top_n=3)

This gives us three topics, and we can get more information about them using the code below.

for t in similar_topics:
    print(topic_model.get_topic(t))

The above code prints the three topics that discuss sports:

[('Youth Soccer Engagement and Comparison to Football', 1)]

[("Men's High Jump and Notable Sports Achievements", 1)]

[('Music, Sports, and Tech Enthusiast', 1)]

That’s it for today.

I am building a course on how to build production apps using LLMs. We will cover topics like prompt engineering, RAG, search, testing and evals, fine tuning, feedback analysis, and agents. You can register now and get 50% discount. Register using form – https://forms.gle/twuVNs9SeHzMt8q68
